Take your toolbox with you

Previously I posted about two ways to get SysInternals onto your machine. Well, that great bunch has released yet another method! This time it is a download package which contains all the tools and none of the fluff (so no BSOD screen saver)! This is a great addition to your toolbox! You can get it from here.

Thanks to Sarah for the heads up on this!

Dev4Devs!

Earlier this year I attended a great event called Dev4Devs, which is run by Microsoft on a Saturday morning at their offices and invites any developer to get up and present for 20 minutes! This is a great introduction to a lot of things, as well as a great place to start if you want to become a presenter. Well, it’s happening again in October! I will definitely be there, so head over to Eben’s blog for more details.

Microsoft CRM on Linux!

The main issue is that MSCRM needs Internet Explorer to work. It needs IE because of the million lines of JavaScript that exist, a lot of which make use of MSXML, which is not available cross-platform.

Henk did some magic and found a program/tool/package called IEs4Linux! What is IEs4Linux?

IEs4Linux is the simpler way to have Microsoft Internet Explorer running on Linux (or any OS running Wine). No clicks needed. No boring setup processes. No Wine complications. Just one easy script and you'll get three IE versions to test your Sites. And it's free and open source.

They mention three versions, which are 5.0, 5.5 and 6.0; 7.0 is in beta. Since MSCRM needs 5.5 or higher it just might work! Henk did his magic to get it working and I did mine (which was very easy, just making sure my VM was running and getting the firewall configured), and without much fuss he got Microsoft CRM running on Linux!!! He has promised a whole post on IEs4Linux, so if you want to know more please watch his site. Otherwise enjoy the pictures of MSCRM below, taken from his Linux box!

Hyper-V Shrinking a VHD

Virtualisation is the way of the future, be it for demos, testing or production systems, and that means VHD files will be everywhere. However, VHD files grow and grow and never shrink because of the way they are designed to work. For example, if you put a 10GB file on a VHD, the VHD expands by 10GB, but if you delete that file the space isn't reclaimed automatically.

This is pretty much ok for production systems (your VHDs live on a SAN with lots of disk or they should do for a lot of good reasons).

However, if the VHD is on your laptop for training/R&D or for sending to customers, being able to reclaim that disk space can be valuable. The good news is that it can be done manually, so let me show you how in Hyper-V.

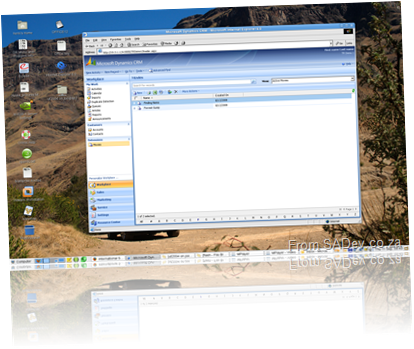

For this post I have a VHD which contains an MSSQL database (MDF and LDF files). The disk space usage for the drive on the VHD looks like this:

So the VHD is supposedly a max of 300GB and I have never copied that much on to it; at the moment it contains a mere 53GB and some change. The actual VHD file on my laptop looked like this, using a nice 117GB of real disk space. So there is roughly 64GB I could get back!

The first step in shrinking the disk is to defrag it inside the virtual machine, as the shrinking process only reclaims space from the end of the disk. So if you have any data at the end like I did (see below) you'll need to (re)move it. As you can see, I had an "unmovable" piece of data sitting conveniently at the end of the disk. Thankfully it turned out to be the LDF file for the SQL database.

So a quick truncate/empty of the LDF file (don't do that in production, but if you want to know how I did it see here) made it a lot easier to defrag the disk, especially freeing up the data at the end. Since I didn't have much free time I skipped the defrag and just worked with the big (now) empty space available before the MDF (blue) file.

Step two is to shut down the VM, then go to the settings, go to the disk settings and click edit. This will bring up the disk edit wizard; keep the first option, Compact, selected.

This is very cool because it actually loads the VHD as a disk on the host operating system! In fact you can browse it and edit it (I would guess, but that may screw the compact up). This is similar to the VHDMount tool in Virtual Server. I am not sure how to do it manually in Hyper-V yet, but it would be really cool to be able to do that.

After some time (let me rephrase that: a significant amount of time, which in my case was six-odd hours) it finished compacting the VHD file, which was now 60GB smaller!

Random Tool Musings

First off is pptPlex, which is an add-on for PowerPoint that provides a DeepZoom-like experience (those at the TechEd closing know what I mean) with your slide deck. It's an Office Labs add-in, so a prototype that will never see the mainstream. After a touch of configuration (select backdrop, transition between slides, configuring sections) it appears to generate an XPS render of the slides with cool transitions, but as XPS doesn't support animation that is the first (and biggest) loss in functionality when compared to normal PowerPoint. At the end of the day I doubt it will be shipped, but I think we may see these sorts of transitions appearing in Office "13".

In my upcoming post on shrinking a VHD, I briefly mention that I reclaimed the disk space by emptying the SQL log file. That is a bad idea for most systems, for many a reason beyond my non SQL guru mind, but if you are interested in how to do it anyway see the following article, which is what I used as my guidance: http://madhuottapalam.blogspot.com/2008/05/faq-how-to-truncate-and-shrink.html

Lastly there have been updates to some of my favorite tools, first from Microsoft, namely StyleCop and FxCop, both of which have been updated. That’s very positive news, especially since FxCop has been a bit stagnant. However the news about another favorite tool, Reflector, being taken over by RedGate (see here) does worry me. I do not think they will kill it or kill the free version, but I am worried about two versions coming out (pay and free), with pay getting all the features and new stuff and free being crippled or not getting enhancements. Only time will tell...

MOSS 2007: Can't upload multiple files and can't use explorer view

:) Summarized version: This is on Windows 2008. (told you it was five words)

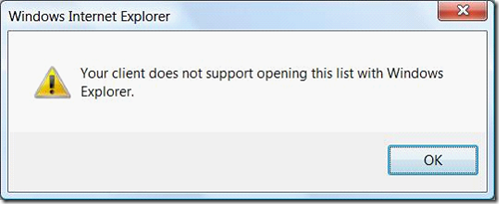

Anyway, on this machine I wanted to upload a bunch of files to a MOSS 2007 site, so I went to the action menu, file upload and… dum dum dum! The multiple file upload was gone! So I decided to switch to the cool explorer view and drag the files in that way: nice, quick and WRONG! It was broken too :(

Since I really didn't want to upload each file individually because it would take too long I needed to fix one of them, which began my usual problem solving process of try this, Google that, tweak this etc… but nothing worked :( After a while I started to think it was the server and not my machine, but no one else seemed to have an issue, so it must be my machine.

After more problem solving on my machine, I stumbled on the cause: I was using Windows 2008 for Hyper-V, and thus running 64-bit. See, in 64-bit Windows there are two versions of Internet Explorer, a 32-bit and a 64-bit version, and I was running the 64-bit one. Changing to the 32-bit version fixed the problem straight away.

Slide.Show and SharePoint: Part I - Configuring Slide.Show

This is a multi-part series. The other parts can be found at

- Slide.Show and SharePoint: Part II – Getting the data

- Slide.Show and SharePoint: Part III – Deploy and final config

This was inspired by a post on the IWSA group about how to get a decent slide show in SharePoint using a picture library as a source. The poster went on to say that Slide.Show looked like it would meet his need and wondered if it could be used. For those who do not know, Slide.Show is a cool Silverlight 1.0 component which takes a list of images and displays them as a slide show, with the presentation looking brilliant. Slide.Show has options like pausing, skipping the current image, viewing a grid of thumbnails so you can select which one to skip to, and even a full screen view! It has in fact over 300 options you can tweak to get it just the way you like it!

If you don't know what SharePoint is, how is life under that rock? ;) SharePoint (or, as it is officially known, Microsoft Office SharePoint Server) is a cool system too; it has all kinds of functions for document management. One of them is a picture library, but the downside of the picture library is that it is displayed as a boring list or, if you configure it, one static picture… *YAWN*. So there is a perfect harmony just waiting to occur!

But how can you use Slide.Show together with SharePoint? I wish it was as straightforward as adding a web part, but it isn't. That said, it is not difficult to get up and running; it just requires a few tricks and some thinking outside the SharePoint box.

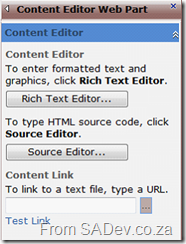

The first step is to add a Content Editor Web Part (CEWP), which can be found under the Miscellaneous section.

The CEWP is interesting in that it allows you to set the content using rich text (with Microsoft Wordpad-like options) or to put the HTML directly into it using the Source Editor option. Being able to put HTML directly in does not mean just HTML; you can actually put anything in it and have total control over what will be rendered! Since we will be using a lot of JavaScript, this is exactly what we need to get Slide.Show into SharePoint.

The first step in getting this working is to get Slide.Show set up. Once you have downloaded the source package and extracted it, run the release.bat file, which will combine a lot of JS files into two files in a release folder. The cleanest way to configure it is to take just the release folder and place it in a folder under your SharePoint web site. I preferred to take the whole Slide.Show folder (part of the files extracted) and place it under the SharePoint web site, as that allows me to run some tests which make it easier to get the system set up correctly, as in the screen shot below.

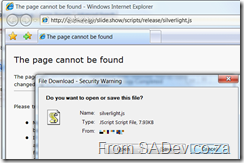

If you have also followed my thinking about taking the slightly messier config, you should be able to fire up your favorite browser and type in http://<your website>/slide.show/samples/empty/default.html which should show you a pure black screen (right click and it should say Silverlight config). The point of that boring sample is that it just makes sure that the content is available and that there are no server issues or client issues preventing it from working. Another simple test to check everything is configured correctly is to go to http://<your website>/slide.show/scripts/release/silverlight.js which should prompt you to download it (like in the picture below).

Now that Slide.Show is configured correctly we can get back to the CEWP, where we need to plop in the code to make it work. Since the CEWP allows us to drop in any source, we will use that to put in the JavaScript we need for Slide.Show to work. This is where we lose the straightforwardness of doing this, because if you thought you could follow the Slide.Show quick start guide and get it to work… you would be screwed. This is because we can't put the <script> tags in the header of the page like the quick start guide tells us, as SharePoint won’t let that happen. So our first step is to put in our own code to load and run the JS files, and then we can start using Slide.Show as normal.

To do this we first need to tell the system that we will be running some JavaScript, using a normal script tag:

Note: A complete version of the JavaScript is available at the end of the article without line numbers, to allow for easy copy and paste.

1: <script language="JavaScript">

Next we will use the XMLHttpRequest class to load the JS file synchronously. However if we just called XMLHttpRequest then this would only work in real web browsers (IE 7 and higher, Firefox etc…) but since so many people use older IE versions we need to cater for them by adding a bit of code like this (source of the next 8 lines is not my bubble like mind but Wikipedia):

1: // Provide the XMLHttpRequest class for IE 5.x-6.x:

2: if( typeof XMLHttpRequest == "undefined" ) XMLHttpRequest = function() {

3: try { return new ActiveXObject("Msxml2.XMLHTTP.6.0") } catch(e) {}

4: try { return new ActiveXObject("Msxml2.XMLHTTP.3.0") } catch(e) {}

5: try { return new ActiveXObject("Msxml2.XMLHTTP") } catch(e) {}

6: try { return new ActiveXObject("Microsoft.XMLHTTP") } catch(e) {}

7: throw new Error( "This browser does not support XMLHttpRequest." )

8: };

For the following example we will just load one file, but when we are done we will need to repeat this for all our JS files:

1: var silverlightRequest = new XMLHttpRequest();

2: silverlightRequest.open("GET", "/slide.show/Scripts/Release/Silverlight.js", false);

3: silverlightRequest.send(null);

NOTE: If you have put Slide.Show in a different location to me then you need to adjust the second line as needed.

The last step in loading the file is to call the eval function, which allows us to execute the JavaScript we retrieved. This makes its classes and methods available to us:

1: eval(silverlightRequest.responseText);

Once we have loaded both Slide.Show JS files, we can then use Slide.Show as normal by calling:

1: new SlideShow.Control();

1: </script>

Now click OK to close the HTML source code editor and OK (or apply) again for the web part, and it should give us the same result as our first test, the empty sample. That’s kind of boring, so to end off part one in this series let's modify the Slide.Show creation line to load a configuration file from one of the samples. We will use the Flickr one as it requires nothing on the machine. The modified Slide.Show creation line with the configuration specified looks like:

1: new SlideShow.Control(new SlideShow.XmlConfigProvider({ url: "/slide.show/Samples/Flickr/configuration.xml" }));

The full JavaScript that we would place in the CEWP to get it this far would look like this:

<script language="JavaScript">

// Provide the XMLHttpRequest class for IE 5.x-6.x:

if( typeof XMLHttpRequest == "undefined" ) XMLHttpRequest = function() {

try { return new ActiveXObject("Msxml2.XMLHTTP.6.0") } catch(e) {}

try { return new ActiveXObject("Msxml2.XMLHTTP.3.0") } catch(e) {}

try { return new ActiveXObject("Msxml2.XMLHTTP") } catch(e) {}

try { return new ActiveXObject("Microsoft.XMLHTTP") } catch(e) {}

throw new Error( "This browser does not support XMLHttpRequest." )

};

var silverlightRequest = new XMLHttpRequest();

silverlightRequest.open( "GET", "/slide.show/Scripts/Release/Silverlight.js", false);

silverlightRequest.send(null);

eval(silverlightRequest.responseText);

var slideshowRequest = new XMLHttpRequest();

slideshowRequest.open( "GET", "/slide.show/Scripts/Release/SlideShow.js", false);

slideshowRequest.send(null);

eval(slideshowRequest.responseText);

new SlideShow.Control(new SlideShow.XmlConfigProvider({ url: "/slide.show/Samples/Flickr/Configuration.xml" }));

</script>
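Since the load-and-eval steps above repeat once per JS file, they could be folded into a small helper. What follows is only a sketch under my own assumptions: loadScriptsSync and its createRequest and run parameters are hypothetical names (not part of Slide.Show or SharePoint), and the status check is an extra that the script above does not do. The request factory and the function that executes the source are passed in so the helper can be tried outside a browser too.

```javascript
// Sketch only: loadScriptsSync, createRequest and run are hypothetical names,
// not part of Slide.Show or SharePoint.
// urls          - array of script URLs to fetch, in order
// createRequest - function returning an XMLHttpRequest-like object
// run           - function that executes the downloaded source text
function loadScriptsSync(urls, createRequest, run) {
  var loaded = [];
  for (var i = 0; i < urls.length; i++) {
    var request = createRequest();
    request.open("GET", urls[i], false); // false = synchronous, as in the script above
    request.send(null);
    if (request.status !== 200) {
      // Assumption: anything other than HTTP 200 is treated as a failure.
      throw new Error("Could not load " + urls[i] + " (HTTP " + request.status + ")");
    }
    run(request.responseText);
    loaded.push(urls[i]);
  }
  return loaded;
}

// Possible use inside the CEWP (browser only, so left as a comment here):
//
// loadScriptsSync(
//   ["/slide.show/Scripts/Release/Silverlight.js",
//    "/slide.show/Scripts/Release/SlideShow.js"],
//   function () { return new XMLHttpRequest(); },
//   function (source) { (0, eval)(source); }
// );
```

One thing to watch: a direct eval inside a function would scope the script's var declarations to that function, so SlideShow would not end up global. The indirect call (0, eval)(source) evaluates in the global scope in ES5-era browsers; older IE versions needed window.execScript for the same effect, so treat that last argument as the part to adapt.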

- Update – 25 Sept 2008: I have cleaned up this post a bit to make it easier to understand.

- Update – 26 Sept 2008: Added information/fix to get it to work on older IE versions.

Silverlight: Lessons Learnt

To check the video go to http://technet.microsoft.com/en-us/events/teched/cc561184.aspx

Complex Reporting: Part 5 - Wrap up

The next problem is the Export To option in SRS, where you can export to a variety of formats such as PDF, Excel etc… Having sub-reports within table cells is NOT supported by the Excel one; you just get a gray block where the sub report would be. PDF, TIFF, and web archive all work fine. If your users are expected to export to Excel you need to rethink using this.

Lastly you have to make sure you set the width and height and lock the cells on the report and sub-reports so they do not expand and shrink. If you don’t get the width/height the same all over, your cells don’t align between the report and sub-reports and it looks shocking.

Complex Reporting: Part 4 - Introducing Sub-Reports

So how do we use them? Well if we look back at our previous image where we had the fields scattered all over there is a distinct pattern here, basically there are 5 blocks (Q1, Q2, Q3, Q4 and Total) in a row under each fiscal year for each commodity/deal, and the horizontal total row at the end is basically the same.

What we can do is merge those five cells on the table together and insert a sub report into that merged cell, and since all the groups are the same they can all point to the same report. The exception is the horizontal total row, which looks the same but is calculated a little differently. So we only need two sub-reports and we would structure it as follows:

So how does the sub report know what to show? Well, remember it’s a normal SRS report, so you can just pass parameters to it, and because it’s in a cell of a table you can access the values from the row that is being rendered. So all we need to do is pass two parameters, the fiscal year and the commodity.

Now the complexity is easy since it’s just a simple bit of SQL using the same UNION stuff as we used before:

SELECT f1q1value, f1q2value, f1q3value, f1q4value FROM Deals WHERE (deal = @projectid) AND (fiscal1 = @fiscal) UNION SELECT f2q1value, f2q2value, f2q3value, f2q4value FROM Deals WHERE (deal = @projectid) AND (fiscal2 = @fiscal) UNION SELECT f3q1value, f3q2value, f3q3value, f3q4value FROM Deals WHERE (deal = @projectid) AND (fiscal3 = @fiscal) UNION SELECT '0','0','0','0'

If you read that and saw the last SELECT and went, WHOA, good for spotting it. What’s happening is that I always want a result regardless, so that I don’t get issues caused by missing fields. So by adding that and only selecting the top record I ensure that there is always a value, even if it is zero. The total column on the sub report is just a calculated field adding the four values up.
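To make the "always get a row back" idea concrete, here is a minimal JavaScript sketch of the same logic. It is an illustration only, not how SRS runs the query: the deal object is a hypothetical in-memory stand-in for one row of the Deals table (using the same column names as the SQL), and quarterValues and rowTotal are names I made up.

```javascript
// Sketch only: mirrors the sub-report query in plain JavaScript.
// "deal" is a hypothetical object using the Deals table column names.
function quarterValues(deal, fiscal) {
  // Check fiscal1, fiscal2 and fiscal3 in turn, like the three SELECTs.
  for (var slot = 1; slot <= 3; slot++) {
    if (deal["fiscal" + slot] === fiscal) {
      var prefix = "f" + slot;
      return [
        deal[prefix + "q1value"],
        deal[prefix + "q2value"],
        deal[prefix + "q3value"],
        deal[prefix + "q4value"]
      ];
    }
  }
  // Same job as the final SELECT '0','0','0','0': never return nothing.
  return [0, 0, 0, 0];
}

// The total column: just the four quarter values added up.
function rowTotal(values) {
  var total = 0;
  for (var i = 0; i < values.length; i++) {
    total += Number(values[i]);
  }
  return total;
}
```

So a deal with no values for the requested fiscal year still produces four zeros and a total of zero, which is what keeps the table cells from going missing.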

For the total row sub report, it’s basically the exact same except we are now wrapping the fields in SUMs; that’s the only change. The last thing to make sure of is that for the initial table on the main report you get all the commodities for all the periods. To do that your SQL needs to take into account all the possibilities, like so:

SELECT Deal FROM Deals WHERE ((fiscal1 = @FiscalYear) OR (fiscal2 = @FiscalYear) OR (fiscal3 = @FiscalYear) OR (CAST(RIGHT(fiscal2,2) AS Int) = CAST(RIGHT(@FiscalYear,2) AS Int)+1) OR (CAST(RIGHT(fiscal2,2) AS Int) = CAST(RIGHT(@FiscalYear,2) AS Int)+2) OR (CAST(RIGHT(fiscal3,2) AS Int) = CAST(RIGHT(@FiscalYear,2) AS Int)+1) OR (CAST(RIGHT(fiscal3,2) AS Int) = CAST(RIGHT(@FiscalYear,2) AS Int)+2)) ORDER BY Deal

@FiscalYear is the name of our drop down we mentioned earlier and we use a little bit of SQL to get it into an INT and manipulate it to give us every possible combination.
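The WHERE clause in the query above is easier to follow when written out as a predicate. This is a rough JavaScript sketch of the same conditions; dealMatches and lastTwoDigits are hypothetical names, and the "FY08" style of fiscal year label in the usage lines is my assumption (all the SQL tells us is that the last two characters are digits).

```javascript
// Sketch only: the WHERE clause of the main report query as a predicate.
// Equivalent of CAST(RIGHT(value, 2) AS Int).
function lastTwoDigits(fiscal) {
  return parseInt(String(fiscal).slice(-2), 10);
}

function dealMatches(deal, fiscalYear) {
  // Exact match on any of the three fiscal columns.
  if (deal.fiscal1 === fiscalYear ||
      deal.fiscal2 === fiscalYear ||
      deal.fiscal3 === fiscalYear) {
    return true;
  }
  // fiscal2 or fiscal3 falling one or two years after the selected year.
  var selected = lastTwoDigits(fiscalYear);
  var later = [deal.fiscal2, deal.fiscal3];
  for (var i = 0; i < later.length; i++) {
    var difference = lastTwoDigits(later[i]) - selected;
    if (difference === 1 || difference === 2) {
      return true;
    }
  }
  return false;
}

// Hypothetical usage, assuming "FY08"-style labels:
var exampleDeal = { fiscal1: "FY07", fiscal2: "FY08", fiscal3: "FY09" };
var picked = dealMatches(exampleDeal, "FY06");  // fiscal2 is two years later
var skipped = dealMatches(exampleDeal, "FY05"); // nothing within two years
```

A missing fiscal2 or fiscal3 simply fails the comparison in this sketch, because parseInt returns NaN and NaN never equals 1 or 2.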