SQL BI Boot Camp - Day 1: Afternoon Session

Reporting Services

After lunch we dived into the next topic I know (don’t worry I learn stuff later in the day), namely Reporting Services :) So we started with the PowerPoint again :| I am not repeating everything he said, since a lot is covered in my earlier post on reporting services.

First where are reports used:

- Internal reporting – operational & management

- External – to customers

- Embedded – Portals, Windows and Web

The lifecycle of report development is:

Reporting lifecycle – Once again no clever names (I should work on that).

Some notes on RS which I never knew: Data driven subscriptions are an enterprise edition only feature :( and drill through only enabled for reports rendered into html (wonder why PDF doesn’t support it).

Reporting Services Labs

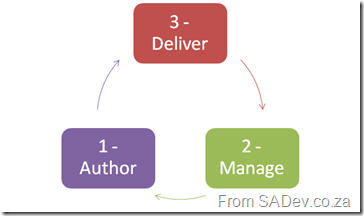

Right after the slides were put away we dived into the first lab… actually we didn’t because the report builder 2.0 software was NOT installed on the machines :( Skip over lab 1 and onto lab 2! Lab 2 involved creating a simple report with a chart control on it. It enjoyed this lab even though it was fairly simple.

The report from exercise 1. Note two charts but it is a single chart control. Thanks Dundas :)

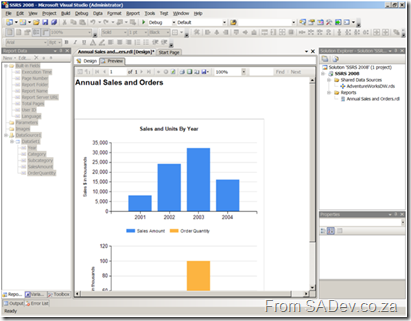

For exercise 2 we created a report with gauges:

The report from exercise 2.

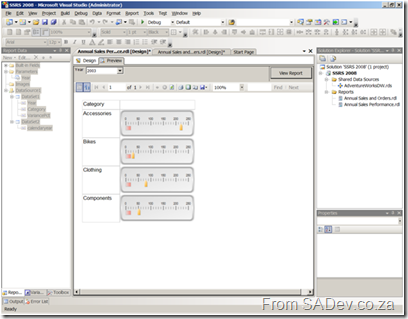

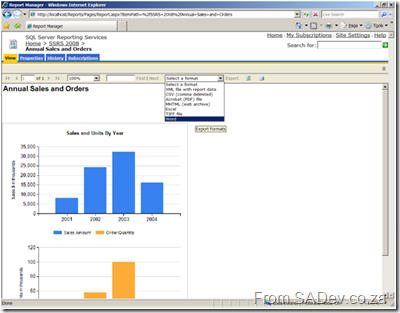

The third exercise involved publishing to a RS server from Visual Studio and then exporting to Word.

The report about to be exported in exercise 3.

All in all I found the RS labs very enjoyable if not even at the advertised level 200 for the course.

Introduction to UDM

With that out of the way we looked at UDM, which is the SQL 2005/2008 name for a cube! UDM stands for Unified Dimensional Model.

Uses of OLAP

- Sales analysis

- Forecasting and budgeting

- Financial reporting

- Web Statistics

- Survey Result

- ETL Process Analysis

SQL Server Analysis Services (SSAS) is made up of an OLAP component and a data mining component.

Basic data mining is also available for free in Excel (separate download).

Building a cube provides more functionality to users, enables high performance queries, abstracts multiple sources and encapsulates business rules.

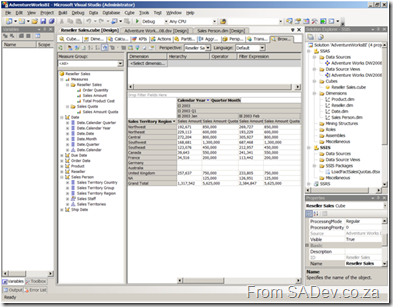

Now that the introduction is done it was on to the labs. The first lab was to build a cube! There seem to be WAY too many steps involved in doing this (maybe I am just a lazy developer) but it quickly became an exercise on following a manual and not really any understanding of what and why each step was being done. One of the comments I over heard was that it was a lot of work to build a pivot table, because that’s all we ended up with. I think that actually highlighted the lack of information about why we did things :S

Using the data browser in the cube we built.

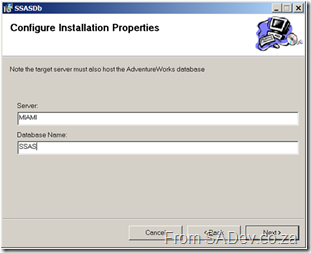

We then fired up Visual Basic :shock: to build an installer for for the cube so it could be installed on multiple servers easily. This was very interesting, mostly because I spent a lot of time in the past with MSI and never really got to grips with the advanced features (like embedding VB code).

My VB code! Actually it’s copy and paste from the snippets file.

Deploying my cube using the installer.

To do that we were given 75min and the manual said it expects us to complete lab 1 in 75 minutes (I did it in 71), but no extra time was allocated for lab 2 which was estimated 45 min, lab 3 or lab 4 which were estimated at 15min each. That meant unless you were super fast with this (i.e. you knew it) you couldn’t get to 75% of the labs :(

Introduction to MDX

After the cubes we moved into MDX which is a language similar to SQL but optimized for handling analysis of data in a cube. It’s specifications are either from OLEDB for OLAP (ODBO) or XML/A.

Since it is a language it is not a end user, or even power user, tool. It is a development tool and the skills needed to help learn it are:

- Good to have: SQL Skills, Excel Skills (Formulas)

- Not helpful: C# or ASP.NET

So what does it look like: SELECT <Dicers> FROM <Cube or sub query> WHERE <Slicer>

SQL vs. MDX

- In SQL the result is defined by columns which populate rows while in MDX it is defined by rows and columns and populating cells.

- In SQL the row headings are data while in MDX they are a schema.

- SQL aggregates dynamically into groups while in MDX data is referenced by cell sets.

What is a MDX Set: Grouping of items together. Uses standard set notation: { … }

You can add formatting (like currency information or thousand separators) to the result in the cells using the CELL FORMATING statement.

Tuple – A list of members from different hierarchies separated by commas. Pronounced: Too-ple like quadruple. These are what goes in the <Dicers> and <Slicers> section of the example above.

Imagine the following 3 tables

Towns:

- CT

- JHB

Values:

- SALES

- COS

Sales People:

- JIM

- JACK

You could built the following tuples:

- All sales for CT: (ct,sales) or (sales,ct) <- Order does not matter.

- All sales for JHB and CT: (ct,sales)+(jhb,sales)

- All JIM’s sales in CT and all JACK’s sales in JHB: (ct,sales,jim)+(jhb,sales,jack)

- Note that would exclude all JIM’s sales in JHB and all JACK’s sales in CT.

Types of tuples:

- Complete tuple: references every dimension

- Partial tuple: omits some dimension. Will always use current member if dimension is omitted.

In MDX tuples have functions like: Percentage of parent, prevmember, parallelperiod and children.

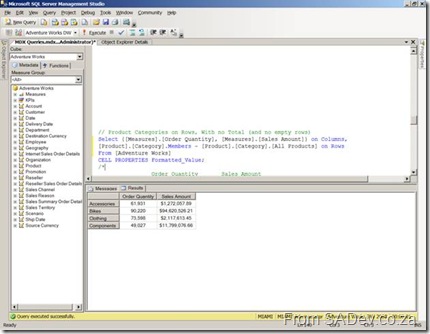

Now that we have looked at MDX we hit the lab which was very refreshing as they provided about 10 broken MDX queries and you had to apply your knowledge (and skills with the help file) to fix each one. This was a really good lab since it actually enabled you to learn and understand what you were doing. It was a little frustrating at first because it’s different but more of the labs should’ve been this way. In the end I got some right and some I just got stuck on and had to peek at the answers to see how to fix them.

One of the MDX queries I fixed.

By now it was after 6pm and time to check-in and head for dinner and drinks.

SQL BI Boot Camp - Day 1: Morning Session

Introduction

So after the drive to Emerald Casino (which took half the time I expected) and registration we started. Frikkie Bosch, marketing manager for app products (BizTalk, SQL, VS), from Microsoft is acting as facilitator for the boot camp! The actual presenter for the days is Kevin Coestzee from IS Partners. The room (pictured below) is big with space for approx. 80 people and Frikkie introduced all the companies attending at the start (lots of big names, including Microsoft staff!) and then Kevin took over and stated that the aim of the course and a brief overview of the SQL Server 2008 BI stack. It would be a level 200 course and some sections catering for business users (about 40% of the attendees) and some sections for technical (about 60% of the attendees).

The training room. This would fill up when everyone eventually arrived.

The PowerPoint section

Now that the stage is set lets look at what was covered in the mornings PowerPoint session:

First up what is BI: Taking knowledge, aggregating data and providing it to business.

BI’s use can be to understand the health of the organisation, collaborate the shared view of business drivers and reduce decision time and these are facilitated through four scenarios:

- Operational reporting

- Activity management

- Data Mart – analytics & decision making

- Data warehouse

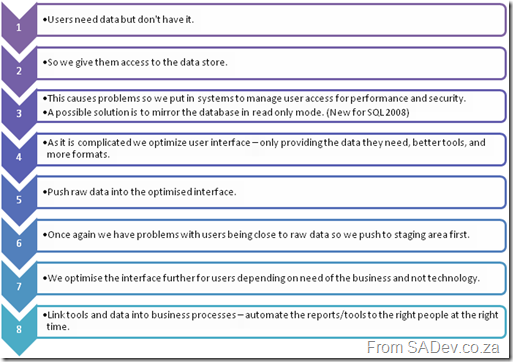

What is the BI evolution:

BI Evolution – I couldn’t come up with names for each stage.

What is a star schema design:

- It is a fact table with a number of linked dimensional tables. Fact tables do not link to other fact tables

A star schema.

- A dimensional table is list of unique dimensions (people, time periods, stores) with a primary key and meta data on the items. They link and support numeric data.

- A fact table combines all dimensional table primary keys with the collection of measurements associated with a business final result of process. For instance the final amounts of a sale, or salary payments. There are two ways to design it:

1) Columns layout – An individual column for each type (salary, sale etc…). Very wide layout with many columns which could be empty.

2) Single value column with a foreign key to a dimension table to designate type (salary, sale, etc…). Very narrow layout but very long and no empties.

The fact table will be the biggest table in the DB.

- This design is good for BI because

- It allows for high performance queries.

- It is designed for models.

- It is low maintenance

To me it seems similar to normalized database model.

What is a snowflake schema design:

It is a normalised version of the star schema where the dimensional table may be related to one or more other dimensional tables. Useful for hierarchy designs, like company organisation charts.

Date Dimensional Table

- Most common dimensional table created as it is used in almost every system.

- Date information is consistent across fact tables.

- Useful common attributes for the dimensional table include: year, quarter, month, day

Parent Child Dimensional Tables

There are tables which are self referring. So one column contains the primary key, while another a foreign key to the first column. Good for organizational charts, where all employees in a single table and the foreign key points to the employees manager.

Slowly Changing Dimensions

The version control system of BI ;) So they support data warehouses by providing information on the past accurately. It is a concept introduced by Ralph Kimball and is used to track changes over changes time (employee sales when they move from store 1 to store 2 etc…).

There are three types:

- Type 1: No tracking.

- Type 2: Full versioning

- Type 3: Partial versioning (current + 1 or 2 back) – Exceptionally seldom used

Type 2 is done by having a start and end date columns for the record which define when it was created and when it was superseded by a new version. If the end date is null then it is current. When a new row is inserted the old data is copied across from the old row, the changes applied and the end date set on the old row.

Type 3 is done by adding a column for the fields to be tracking to the same row and coping the old data to that column before updating the column.

What is Data Integration?

- Transform corporate data into information.

- Enterprises spend 60-80% of their BI resources in the data integration stage.

The Labs

And that ended the marathon PowerPoint slide deck! :) Now on to the first two labs focusing on SSIS, which I already know well, but I am here so I dived into them because you never know what you will find. Each lab is broken into a number of exercises and the first lab (Introduction to ETL techniques using SSIS) is made up of four exercises. Exercises 1 through 3 focused on creating a SSIS package which retrieves csv files from an FTP server and stores them locally. Those are filtered by date using a variable and then unpivot the CSV data and lookup data from dimensions and load all of it into a fact table. I really enjoyed this part, even though it is the “simple” lab is is very cool, especially since you get to read from FTP and do some parsing. The section on unpivots and derived columns (including the horrid expression that is needed) could’ve had more information and the lab should have had a way to see the data before and after.

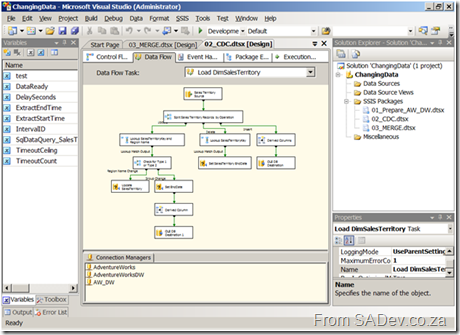

The control flow view of the first lab.

Exercise 4 was about investigating the slowly changing dimension transform while the entire lab 2 was to manage changing data using change data capture or the MERGE statement. Unfortunately both exercise 4 and lab 2 were to me useless. The involved opening a prebuilt solution and going through each of the parts, opening the components and reviewing the settings. Normally no information on why each setting was set the way it was. There were sporadic helpings in the manual but they seemed to wane the closer to the end of the lab you got. This was especially bad for MERGE (which is new to SQL 2008, and I only know that because I read it this week in prep for a session on what’s new in SQL 2008 I am giving) which really needs more information on the use because it is so powerful. These parts really were the “nice to feature x is there” but they were more of a demo without a presenter than a lab.

One of the data flow views from the second lab.

Missed PDC2008?

If you, like almost everyone else (almost = everyone less Eben + Ahmed), did not attend PDC2008 well then why not swing by my office and grab the 60Gb worth of movies from it! Martin Woodward sent us a drive (arrived today) with them on, saving us from a fight with IT over bandwidth usage. There are 202 videos available! So why not host your own mini-PDC!

OMG! The event you just have to attend!

Call it a Christmas present, call it what ever you like but the guys at S.A. Developer have a session on the 8th of December with none other than Scott Hanselman! Make a plan, bribe, call in sick, do what it takes but you owe it to yourself to be there! For all the details see Craig’s Post.

Thanks Rudi for the heads up.

SQL Server BI Boot Camp

This week Thursday and Friday you will be able to find me at Emerald Casino! No, I haven’t given up my cycling addiction for a gambling one (although in yesterdays 94.7 race at the 70km mark I did think about it). Reality is more boring than that I am attending a two day boot camp on SQL BI! The camp covers areas I know well like Integration and Reporting services and areas I don’t know well like analysis services and performance point. I am looking forward to it and presenting a condensed, focused version on my return to the rest of BB&D (and possible S.A. Architect).

If you are attending also let me know via the comments so we can hook up for drinks on the Thursday night! Otherwise keep an eye on the blog for regular postings during the event!

Give a little, get a lot

In the spirit of Christmas it’s time for giving and S.A. Architect is hoping you will give 3 minutes of your time to them. As I posted before Willy is leaving to join the Rangers team at Microsoft. This means that one of our community leads, if not THE community lead, will be in the midst of Microsoft evangelizing and high lighting issues which are specific to our region. However for him to be able to know what WE need, he needs you to tell him! Think of this as a rare opportunity to get your views into Microsoft! So please click HERE and complete the survey! For more details on the survey see Willy’s post.

For my co-workers at BB&D the same survey is available internally on the KB, so you have no reason not to complete it.

Clean landing page

I mentioned that your SharePoint site should be clean and simple and have search on it. Well what does that mean visually? Trust Michael O’ Donovan to provide the perfect screen shot in his last post, well actually not in his last post but in the attachments on his last post:

This is clean (duh), it’s easy to use (people can find the content easily), search is there (and has focus because it’s in the centre) and it’s familiar (remind you of any search engines).

SharePoint Search Tips and Tricks

I thought I would share some tips and tricks for improving the search experience with SharePoint:

| In any company you will have people of different backgrounds and skills using SharePoint, and one of the first issues is that search isn’t fine grained enough, and that users either don’t know or don’t feel comfortable with advanced search features to get it fine grained. To make “normal” search easier just add Faceted Search. If you are interested in what that is go and check out the site. Next improve usage by lighting up SharePoint search to the browsers. Well what does that mean? It means that when you go to a website with a modern browser it “detects” the search functionality and allows you to add it to the build in search functions in your browser, so you can search the SharePoint site from your browser without even going to it first! It does this using an open standard called Open Search. To do this you first need to define an XML file which tells the browser what to do. Example: Really simple, basically just the name and the encoding. The magic is handled by replacing the tag in the URL ({searchTerms}) with what the user is searching for. That file needs to be uploaded to a location on the SharePoint site where it can be read by users. The next step is exposing it to the browsers, to do this you just need to add a line to the header tag in your master page: 1: <link rel="search" type="application/opensearchdescription+xml" href="/search/searchdefination.xml" title="BB&D Portal" /> Now the browsers will see the tag and light up the search facility! This is really helpful for improving adoption of search. Next up it would be great to search multiple locations and you can thanks to a feature in SP 1 called federated search. Where your search query actually calls other web sites for results and places them in a special section of the site, defined by a web part. As I am personally interested in a lot of technologies I think it would be great to have federated search to: Wikipedia, Linux.Com, Java.Sun.Com, MSDN.Microsoft.Com and TechNet.Microsoft.Com, like shown on the left. What you may notice is that MSDN already has support for federated search, but the rest don’t! So how do you get around that? Well Live.Com also does has support for federated search and it also has support for limiting results to a specific site. So all that is needed to do is to create a search provider configuration for live.com and limit it to the specified website. You can download the sample providers I created below: |

|

The last tip is to implement a very clean landing page for the site with a search box on it. As the new landing is cleaner and smaller than the it meant the initial feeling of SharePoint is it is that it is quicker and more responsive and so it also will improve adoption. To get the search box to search properly using just a tiny bit of HTML + JavaScript which looks like this (assuming you have a textbox with ID called query), this will create the button:

1: <input type="button" width="100px" height="" value="Search" onclick="window.location='/pages/SearchResults.aspx?k='+document.getElementById('query').value+'&s=All%20Sites';">

Willy blasting off!

On Friday Willy publically announced his plans for 2009, which include him leaving South Africa to go and work for the big blue monster (aka Microsoft) in the rangers team! Before I get into my view on this, let me explain what the rangers are: A MVP (which Willy is) is selected from the community by the community and big blue as a expert/lead/guide in their field. They are the best of the best outside of Microsoft. The rangers are the internal version of MVP’s. They are the best of the best inside of Microsoft. It is a great honor and privilege and shows really how big an expert Willy is.

In short I am so happy for him and really jealous too, but in the short period I have worked with him I have not even scratched the surface of what he knows… so I am sad that I won’t get that on a day to day basis.

To Willy I wish you the best of luck and enjoyment in this new experience!

DTS to SSIS

I presented a high level session on SQL Server Integration Services (SSIS) this week with the focus on what’s new in 2008, and when and where to use SSIS. Less focus was given to the how because how is easy with SSIS but I did do 30min+ of live demo’s so that showed the high level how as well. SSIS is in it’s second version (2005 being v1) because before that in SQL 2000 and before DTS existed and I was asked during the session on how to migrate from DTS to SSIS. I stupidly mentioned that there was a third party tool but no Microsoft one, because in 2005 there wasn’t and the what’s new in 2008 doesn’t mention anything (1 + 1 = assumption… and you know where that gets you).

Skip forward two days and guess what Zayd the “Server Dude” finds by accident… a TechNet article on how to migrate. Not only showing the third part option but the NEW built in option in SQL Server 2008 which I said didn’t exist! Anyway if you are trying to move from DTS to SSIS then you have to check it out at http://technet.microsoft.com/en-us/library/cc768544.aspx