Why I like Visual Studio 2010? Undock Windows

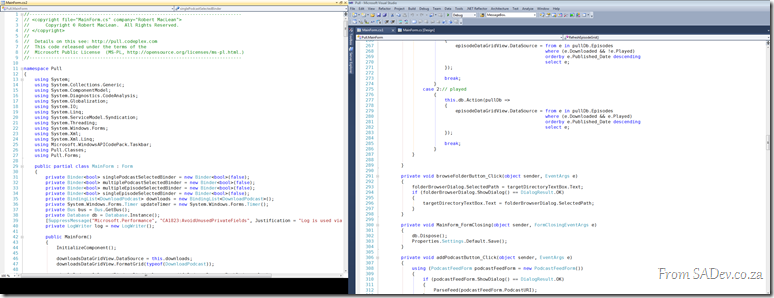

Visual Studio 2010 has the ability to undock code windows by clicking and dragging on the code window tabs. This is a great feature and it is often touted for multi-monitor setups where you may want to have the form on one window and code on another window, or maybe two different code files open at the same time.

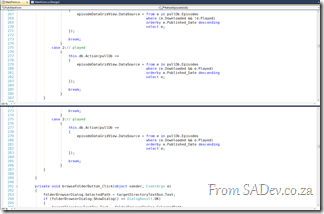

That is very useful; however, that is not why I like this feature. I like the ability to undock windows because it lets me view two different parts of the same file at the same time. Visual Studio 2010 and earlier versions have supported this using the split view option, but that means you lose half of your screen (like below).

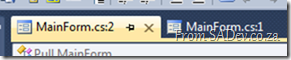

With undockable windows in 2010, you can use the Window -> New Window option to duplicate the current window.

Then you can drag the second (or third, or fourth) window out and get a full-screen, side-by-side view, which is GREAT for comparing code.

Cannot open VS project if Blend is used?

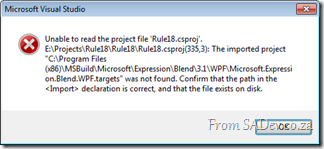

I ran into an issue recently after reinstalling my laptop, where I couldn’t open a C# (WPF) project in Visual Studio 2010. Every time I tried it just grimaced at me and said:

Unable to read the project file 'Rule18.csproj'.

E:\Projects\Rule18\Rule18\Rule18.csproj(335,3): The imported project "C:\Program Files (x86)\MSBuild\Microsoft\Expression\Blend\3.1\WPF\Microsoft.Expression.Blend.WPF.targets" was not found. Confirm that the path in the <Import> declaration is correct, and that the file exists on disk.

Part of the cause is that previously I had Blend installed, and I had used it on this project to do some fairly complex things, in particular Font Embedding. However since reinstalling I had not installed Blend again.

This meant the Blend build targets file was no longer on the machine, and that caused the error.

How do you get around this?

The best solution is to install the FREE Blend SDK because that will put the build targets on the machine. Links for the SDKs:

- Blend 4 .NET SDK: http://www.microsoft.com/downloads/details.aspx?displaylang=en&FamilyID=75e13d71-7c53-4382-9592-6c07c6a00207

- Blend 4 Silverlight SDK: http://www.microsoft.com/downloads/details.aspx?displaylang=en&FamilyID=d197f51a-de07-4edf-9cba-1f1b4a22110d

However, I didn’t have time to do this, so as a temporary workaround (and definitely not a recommended solution) I opened the project file, and right near the bottom I found the Import elements for CSharp and Blend. I removed the Blend one, saved, reloaded the project, and it opened.
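If you want to check a project for Blend imports before opening it, something along these lines may help. It is only a rough sketch in Python: the sample project text is a hypothetical, trimmed-down file (the `$(MSBuildToolsPath)` and `$(MSBuildExtensionsPath)` prefixes are my assumption; only the tail of the Blend path comes from the error above), and the function names are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed-down project file, for illustration only.
# The property prefixes are assumptions; the Blend path tail is from the error message.
SAMPLE_CSPROJ = r"""<Project ToolsVersion="4.0" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <Import Project="$(MSBuildToolsPath)\Microsoft.CSharp.targets" />
  <Import Project="$(MSBuildExtensionsPath)\Microsoft\Expression\Blend\3.1\WPF\Microsoft.Expression.Blend.WPF.targets" />
</Project>
"""


def import_targets(csproj_xml):
    """Return the Project attribute of every <Import> element in the file."""
    root = ET.fromstring(csproj_xml)
    targets = []
    for element in root.iter():
        # Tags look like "{namespace}Import"; compare only the local name.
        local_name = element.tag.rsplit("}", 1)[-1]
        if local_name == "Import":
            targets.append(element.get("Project", ""))
    return targets


def blend_imports(csproj_xml):
    """Return only the imports that point into the Expression Blend folder."""
    return [t for t in import_targets(csproj_xml) if "Expression\\Blend" in t]


if __name__ == "__main__":
    for target in blend_imports(SAMPLE_CSPROJ):
        print("Blend import:", target)
```

This only reports the imports; it does not edit the project file, so the decision to install the SDK or remove the line stays with you.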

VirtualBox UUID already in use

I am playing around with using VirtualBox to run virtual machines, and I ran into an issue trying to use a clone (copy) of a VDI file. The VDI file is the virtual hard disk, and it contains a unique identifier (UUID), so trying to use a clone of one gives you the error: UUID of the hard disk is already exist in the media registry.

To solve it you need to run the following command to change the UUID of the file:

VBoxManage.exe internalcommands sethduuid [VDI file]

Example:

"c:\Program Files\Oracle\VirtualBox\VBoxManage.exe" internalcommands sethduuid AppFabric.vdi
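If you have several cloned disks to fix, the same command can be scripted. Below is a minimal Python sketch, assuming VBoxManage lives in the default install path used in the example; the function names are hypothetical, and the script only prints the command unless you call `assign_new_uuid` yourself.

```python
import subprocess

# Assumed default install location (taken from the example); adjust for your machine.
VBOXMANAGE = r"c:\Program Files\Oracle\VirtualBox\VBoxManage.exe"


def sethduuid_command(vdi_path, vboxmanage=VBOXMANAGE):
    """Build the argument list for the sethduuid command."""
    return [vboxmanage, "internalcommands", "sethduuid", vdi_path]


def assign_new_uuid(vdi_path, vboxmanage=VBOXMANAGE):
    """Run VBoxManage against one cloned VDI; raises if the tool reports failure."""
    subprocess.run(sethduuid_command(vdi_path, vboxmanage), check=True)


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch is safe to try.
    print(sethduuid_command("AppFabric.vdi"))
```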

Announcing new sponsor for Visual Studio and TFS hands on lab event: Devexpress

I’m sure you know that in a week 50 talented and interested people will be getting together for a day of FREE hands on labs focused on Visual Studio 2010 and TFS 2010! If you didn’t know, have a look here.

Today I am very proud to announce we have another sponsor for the event – Devexpress! Devexpress are makers of some FANTASTIC add-ons for Visual Studio and development.

So what are they sponsoring? TWO licenses for their Visual Studio productivity add-on: CodeRush!

These two licenses will be given away as prizes to two lucky people at the event (you must be there to win).

ALM in 2010

Here are the slides from my talk on Application Lifecycle Management in 2010, which I did for the Information Worker user group. You can download the slides and the white paper from the IW site.

Here is the blurb for the session:

Development is not just about writing code and pressing compile. It is a process which starts long before code is written and extends long after the compile is done, and this process includes a lot of management. This process is called Application Lifecycle Management (ALM).

SharePoint development is not immune to this and this presentation looks at what the landscape and tools are like for ALM in 2010 and how it relates to SharePoint development.

HTTP Status Codes Cheat Sheet

Today’s poster covers the variety of HTTP status codes which exist. Not only are there the 34 original HTTP v1.0 ones and the 6 new ones that came in with 1.1, there are also another 10 extensions from official RFCs and two special-case ones. That is 52 in total, and it isn’t easy to remember what they all are, so this poster is a cheat sheet to help with that.

It is also related to my other REST cheat sheet, since good HTTP status code usage is important for good REST services.
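As a quick illustration of how the codes group together, here is a small Python sketch that classifies a status code by its first digit (the five standard classes) and looks up its reason phrase in Python's standard `http.HTTPStatus` table. The helper names are my own, and Python's table will not necessarily match the poster's list code for code.

```python
from http import HTTPStatus

# The five standard classes, keyed by the first digit of the status code.
CLASSES = {
    1: "Informational",
    2: "Success",
    3: "Redirection",
    4: "Client error",
    5: "Server error",
}


def status_class(code):
    """Return the class name for a status code, or "Unknown" if out of range."""
    return CLASSES.get(code // 100, "Unknown")


def describe(code):
    """Return a one-line description such as "404 Not Found [Client error]"."""
    try:
        phrase = HTTPStatus(code).phrase
    except ValueError:
        # The code is not in Python's built-in table.
        phrase = "(unlisted)"
    return f"{code} {phrase} [{status_class(code)}]"


if __name__ == "__main__":
    for code in (200, 301, 404, 500):
        print(describe(code))
```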

You can download the high resolution XPS file below.

"How RESTful are your services?" - The quick reference poster

Today’s quick reference poster looks at REST services and gives a guide to how RESTful (or not) your services are, from level 0 (not RESTful) to level 4 (using everything). These are not scientific levels; it is just a simple classification I devised to get a team thinking about which features to use and to make it easier to talk about the services. It is not a requirement to always be at level 4. Sometimes you just need level 1 and that is fine – do not over-engineer for the sake of saying you are level 99.

If you are into classification of REST services, have a look at http://www.innoq.com/blog/st/2010/07/rest_litmus_test_for_web_frame.html

Download the high resolution XPS file below.

Proven Practices in Threading Quick Reference Poster

This poster is based on my talk on .NET Threading; I wrote about the entire process of creating that talk (see here). This poster takes the key proven practices in the talk and groups them into six themes for easy consumption.

High resolution XPS version below.

Free Visual Studio 2010 and TFS 2010 training!

Update 13 July 2010: The event is fully booked now! If you want to add yourself to the waiting list in case a spot becomes free, please contact me.

![trainingpic[1] trainingpic[1]](https://www.sadev.co.za/files/trainingpic1_thumb.png) I am very excited to announce that Zayd Kara, fellow Visual Studio ALM MVP, and I have arranged a free hands-on labs training day for Visual Studio 2010 and TFS 2010. This is your opportunity to spend some time learning about the new features in these products.

The event will take place on Saturday, 31st July 2010, from 9am to 1:30pm. It is completely free, but it is limited to 50 people!

You will be able to do the following labs:

- Authoring and Running Manual Tests using Microsoft Test Manager 2010

- Branching and Merging Visualization with Team Foundation Server 2010

- Code Discovery using the Architecture Tools in Visual Studio 2010 Ultimate

- Debugging with IntelliTrace using Visual Studio 2010 Ultimate

- Introduction to Coded UI Tests with Visual Studio 2010 Ultimate

- Introduction to Test Case Management with Microsoft Test Manager 2010

- Planning your Projects with Team Foundation Server 2010

- Understanding Class Coupling with Visual Studio 2010 Ultimate

- Using the Architecture Explorer in Visual Studio 2010 Ultimate to Analyze Your Code

Click here for more details and registration form.

I would also like to take this opportunity to thank our sponsors for making this possible:

What's new in Visual Studio 2010 quick reference poster

One of my favourite aspects of my work at BB&D is the creation of quick reference posters and cheat sheets for the DRP site, which is one of BB&D’s ways of sharing knowledge outside the organisation. I have recently produced a bunch of new posters which I will be releasing over the next few days. First up is…

What’s new in Visual Studio 2010

A quick reference poster which explains the edition changes, some new features (IntelliTrace and the architecture tools) and gives some hints about the IDE (docking of windows, search shortcuts, block selection and zooming).

You can download the high definition XPS file below.