Presentation Dump - Mid 2010: VS2010, NDepend, RESTful Design, SSIS, EntLib 5, .NET Reflection, AppFabric, BDD, Sikuli & Redmine

The past 6 months have been hectic from a presentation perspective for me, with 20 presentations and classes given this year so far. So instead of a single dump of presentations at the end of the year, as I did last year, I am doing a mid-year dump.

What is new in Visual Studio 2010

I have given this presentation many times this year. It originally started as 10 on 10, which looked at 10 features in 20 minutes, for Dev4Dev’s last year. It then evolved into 12 on 10, which added two more features, still in 20 minutes. After that it evolved into ?? on 10 for 6 degrees of code, where it became an hour-and-a-half presentation. It is demo heavy and the slides really only cover the very basics; the important content is in the hidden slides and the notes for the demos.

NDepend

The tool that keeps on giving! For anyone taking over customer sites, reviewing code or doing anything else where you need to deal with other people’s code, this tool is a must. This presentation was given to the architecture team at BB&D.

RESTful Design

RESTful Design is an evolution of an earlier presentation I did, REST & JSON. It drops the JSON material completely and also drops the heavy comparison with SOAP/WS*, which seemed to cause confusion. This revised presentation covers just REST and looks at it much more practically, covering the required HTTP knowledge and the patterns for designing RESTful services.

SQL Server Integration Services

Another 2010 upgrade of an earlier presentation, which not only cleans up some aspects but also goes into a lot more detail.

Enterprise Library

“The presentation that never was.” Often I will spend time researching a technology or trend and preparing a presentation, only to come to the conclusion that it is just not worth the attendees’ time. Enterprise Library 5 is one of those: the presentation covered what is new in it, and that is not very much.

.NET Reflection

This one is actually one from last year, but I had problems getting it onto SlideShare so it is only showing up now.

Windows Server AppFabric

AppFabric (the local one, not the Azure one) was a great presentation I did for the BB&D architecture team. This is not the original presentation; it has been edited to remove customer information, as it contained a lot of analogies between a project BB&D did and AppFabric (because who hasn’t built a system similar to AppFabric?).

BDD

One of the presentations I spent the most time on this year, and one of the most exciting presentations. It really is a great methodology and I would love to see it used more.

Sikuli

Another presentation which did not make the cut to actually be presented. It is an interesting project, but of limited scope, and compared to Coded UI tests in Visual Studio 2010 it is really far behind.

Redmine

Redmine is a bug tracking system, and since it is not TFS, it may surprise you that an ALM MVP would do training on it. However, for me to do the training I had to learn it, which means I know the ALM landscape better and can point out what is better or worse without uninformed bias (btw, it is still TFS :)). This training was aimed not at developers but at call centre/power user people who would log initial bugs to then be managed in the system, so in the end it was more of a ticketing system than a bug tracking system.

TFS Integration Platform: Why the custom adapter format?

Note: This is my personal opinion and not a view of Microsoft.

The TFS Integration Platform includes the ability to create and use custom adapters, however these are done using a custom adapter format. A question asked by many people, including myself, is why a custom adapter format?

Microsoft has a few solutions in the adapter space already which could’ve been used:

- SSIS: SQL Server Integration Services, Microsoft’s platform for ETL, has the ability to build adapters which can read and/or write.

- WCF LOB Adapters: Microsoft has a very complete framework for building LOB adapters which can be used by custom code or products like BizTalk.

- BizTalk adapters: Native adapters for Microsoft BizTalk server.

So why reinvent the wheel? There are three compelling reasons I see for it:

Lightsaber vs. Gun

A weapon from a more civilised age, the lightsaber is a weapon with a number of purposes:

- Deflecting laser shots

- Melting doors

- Cutting off snow monster arms

- Slicing open the belly of a tauntaun.

and it does all those well.

A gun is completely different: it is a single-minded, point-and-click interface for killing, and sometimes it is better to just get the job done, as Indiana Jones showed us.

The other adapter templates are like lightsabers: they are designed to deal with a variety of situations, so flexibility is key. They have the concept of data in/data out and do not care what the data is. The TFS Integration Platform adapters are like a gun: they are built precisely for a single purpose, and so do that exceptionally well.
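To make the analogy concrete, here is a minimal C# sketch contrasting the two kinds of contract. Every name in it (IGenericAdapter, IWorkItemAdapter, WorkItem, InMemoryWorkItemAdapter) is hypothetical and is not the TFS Integration Platform API; it only illustrates how a purpose-built contract leaves the adapter with very little to do.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical "lightsaber" contract: bytes in, bytes out, no idea what the data means.
// Every adapter built on it has to work out IDs, fields and conflicts by itself.
public interface IGenericAdapter
{
    IEnumerable<byte[]> Read();
    void Write(byte[] payload);
}

// Hypothetical "gun" contract: it knows it is moving work items, so the
// surrounding platform can take care of the common plumbing.
public interface IWorkItemAdapter
{
    IEnumerable<WorkItem> GetChangedWorkItems(DateTime since);
    string SaveWorkItem(WorkItem item); // returns the item's ID in the target system
}

public sealed class WorkItem
{
    public string Id { get; set; }
    public Dictionary<string, string> Fields { get; } = new Dictionary<string, string>();
}

// In-memory purpose-built adapter: very little code, because the contract already
// says what a work item is and what must come back (the target ID).
public sealed class InMemoryWorkItemAdapter : IWorkItemAdapter
{
    private readonly Dictionary<string, WorkItem> store = new Dictionary<string, WorkItem>();
    private int nextId = 1;

    // Change tracking is skipped for brevity: every stored item is returned.
    public IEnumerable<WorkItem> GetChangedWorkItems(DateTime since) => store.Values;

    public string SaveWorkItem(WorkItem item)
    {
        if (string.IsNullOrEmpty(item.Id))
        {
            item.Id = nextId.ToString(CultureInfo.InvariantCulture);
            nextId++;
        }
        store[item.Id] = item;
        return item.Id;
    }
}
```

Because the purpose-built contract already says what a work item is and that an ID must come back, the in-memory adapter is only a handful of lines; with the generic contract, each adapter would have to work that out from raw bytes.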

The Scotty Factor

Star Trek is about a group which has research and discovery vessels flying around space, dealing with whatever they encounter. The ships in Star Trek are not designed for warfare¹, but they do a lot of fighting. Why can these peaceful ships fight? Because the reality of the situations they encounter is that they need to be able to defend themselves.

Knowing the common scenarios that can arise ahead of time means you can prepare better, and the TFS Integration Platform is an example of that. There is a lot of knowledge of work items and version control built into the platform, even some assumptions which are not true for all systems, which means that as a developer you write less code in your adapter to get it to work, and that the platform is optimised for the job at hand.

All you need is a paper clip, a rubber band and a sugar cube

A paper clip is meant to hold multiple pieces of paper together; that is its job. Introduce MacGyver and the edge case of a paper clip being used to control low-orbiting satellites comes into play, although he will need a few other things to hack it together. When you work with a generic migration/integration system, those edge cases are very difficult to cater for. The TFS Integration Platform handles edge cases related to the task at hand very well because, for it, they aren’t edge cases; they are normal, expected cases.

¹ There is one ship purpose-built for fighting: the Defiant.

What Office taught me about Visual Studio? A very cool toolbox trick.

I spend a significant amount of time in PowerPoint, and to get a consistent look and feel in my slides I often use the Format Painter option. With this tool you highlight some text/image/video, click the Format Painter button and then paint some other text/image/video, and it applies the format settings from the first selection to the one you paint.

What if you want to do this over and over? You may think you need to highlight → click → paint again and again, but Office has a trick: double click the button! Then you can highlight → double click → paint → paint → paint.

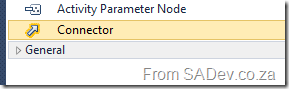

So how does this help in Visual Studio? I am working with the UML diagrams at the moment and needed to connect a bunch of items and the first few times I did the following: Click the connector in the toolbox → click source item → click destination, repeat from start.

Suddenly I thought, maybe it works like Office and you know what? It does! You can double click a toolbox item, and then it becomes sticky and adding lots of them is easy!

How to create an adapter for the TFS Integration Platform - Appendix 2: SimpleDictionary

Note: This post is part of a series and you can find the rest of the parts in the series index.

For my WI adapter I needed an implementation of Dictionary<T,V> which could be serialised with the XmlSerializer, and unfortunately the .NET one can’t be (it implements IDictionary, which XmlSerializer does not support). So I threw together a simple implementation of one using two List<T>. It is not a replacement for Dictionary<T,V> in every situation (for example, the only item manipulation I have is adding an item and clearing all items), but it is great for my needs in this case:

    using System.Collections;
    using System.Collections.Generic;
    using System.Xml;
    using System.Xml.Schema;
    using System.Xml.Serialization;

    [XmlRoot("simpleDictionary")]
    public class SimpleDictionary<Key, Value> : IEnumerable, IXmlSerializable
    {
        // Two parallel lists: keys[n] belongs with values[n].
        private List<Key> keys = new List<Key>();
        private List<Value> values = new List<Value>();

        public List<Key> Keys
        {
            get { return keys; }
        }

        public List<Value> Values
        {
            get { return values; }
        }

        public IEnumerator GetEnumerator()
        {
            return new SimpleDictionaryEnumerator(this);
        }

        public void Add(Key key, Value value)
        {
            keys.Add(key);
            values.Add(value);
        }

        // Accepts a boxed KeyValuePair<Key, Value>; any other object is ignored.
        public void Add(object o)
        {
            KeyValuePair<Key, Value>? keyValuePair = o as KeyValuePair<Key, Value>?;
            if (keyValuePair != null)
            {
                this.Add(keyValuePair.Value.Key, keyValuePair.Value.Value);
            }
        }

        public void Clear()
        {
            keys.Clear();
            values.Clear();
        }

        private class SimpleDictionaryEnumerator : IEnumerator
        {
            private SimpleDictionary<Key, Value> simpleDictionary;
            private int index = -1;

            public SimpleDictionaryEnumerator(SimpleDictionary<Key, Value> simpleDictionary)
            {
                this.simpleDictionary = simpleDictionary;
            }

            #region IEnumerator Members

            public object Current
            {
                get
                {
                    return new KeyValuePair<Key, Value>(simpleDictionary.keys[index], simpleDictionary.values[index]);
                }
            }

            public bool MoveNext()
            {
                index++;
                return index < simpleDictionary.keys.Count;
            }

            public void Reset()
            {
                index = -1;
            }

            #endregion
        }

        public XmlSchema GetSchema()
        {
            return null;
        }

        public void ReadXml(XmlReader reader)
        {
            // Always move past <simpleDictionary>, even when it is empty, otherwise
            // the outer XmlSerializer is left stuck on this element.
            bool wasEmpty = reader.IsEmptyElement;
            reader.Read();
            if (wasEmpty)
            {
                return;
            }

            XmlSerializer keySerialiser = new XmlSerializer(typeof(Key));
            XmlSerializer valueSerialiser = new XmlSerializer(typeof(Value));

            reader.MoveToContent();
            while (reader.NodeType != XmlNodeType.EndElement)
            {
                reader.ReadStartElement("keyValuePair");

                reader.ReadStartElement("key");
                Key key = (Key)keySerialiser.Deserialize(reader);
                reader.ReadEndElement();

                reader.ReadStartElement("value");
                Value value = (Value)valueSerialiser.Deserialize(reader);
                reader.ReadEndElement();

                this.Add(key, value);

                reader.ReadEndElement(); // for keyValuePair
                reader.MoveToContent();
            }

            reader.ReadEndElement(); // for root
        }

        public void WriteXml(XmlWriter writer)
        {
            XmlSerializer keySerialiser = new XmlSerializer(typeof(Key));
            XmlSerializer valueSerialiser = new XmlSerializer(typeof(Value));

            // Each pair becomes <keyValuePair><key>...</key><value>...</value></keyValuePair>.
            for (int counter = 0; counter < this.keys.Count; counter++)
            {
                writer.WriteStartElement("keyValuePair");

                writer.WriteStartElement("key");
                keySerialiser.Serialize(writer, this.keys[counter]);
                writer.WriteEndElement();

                writer.WriteStartElement("value");
                valueSerialiser.Serialize(writer, this.values[counter]);
                writer.WriteEndElement();

                writer.WriteEndElement();
            }
        }
    }

Pro tips for the Visual Studio Pro Power Tools

The team has released a new version of the tools which solves a lot of the issues and provides a bunch more great features. Please make sure you see and use the latest version.

At Tech·Ed North America on Monday the Visual Studio Pro Power Tools were announced, and they are fantastic. A full rundown of them can be found on Brian Harry’s blog. However, some usage tips may not be immediately obvious, so this post covers them.

Add Reference Dialog

The new dialog is amazing and fits the UI experience of VS2010 far better than the out-of-the-box one. One point to be aware of is that when you hit Add it does not close the dialog; instead it marks the selected assembly and adds it in the background. This is great for adding multiple assemblies. You can remove an assembly here too: just select an already added assembly (green tick) and the Add button becomes a Remove button.

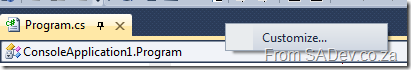

Document Tab Well

This is one of the first things you will see, because the tabs are a variety of colours suddenly. How can you control this? Go to the Tools menu –> Options –> Environment –> Document Tab Well to customise the settings. Setting the preset to Visual Studio 2010 is the same as disabling it.

As a side point, if you are thinking of disabling it, try the Dynamic Tab Well preset first. It is very similar to the standard VS2010 tabs, but with more features added, and should appeal without overdoing it.

The shortcut to get to those settings is to right click on any blank space in the tab well and click Customize…

Highlight Current Line

Another one that is immediately obvious – the grey behind your current line of code. If this colour is not aligned to your colour scheme then you can tweak it using the Tools menu –> Options –> Environment –> Fonts & Colours –> Display Items –> Current Line (Extension).

To “disable” it set the background to Automatic.

Align Assignments

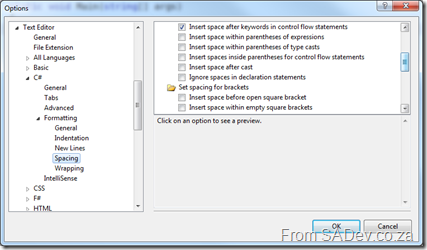

If this is not working for you, it could be because your C# settings are stopping it. To fix this, uncheck Tools menu –> Options –> Text Editor –> C# –> Formatting –> Spacing –> Ignore spaces in declaration statements.

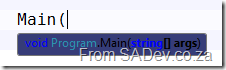

Colourised Parameter Help

Don’t like the colours of the new tooltips? Want them to match your theme?

You can do this via Tools menu –> Options –> Environment –> Fonts & Colours –> Display Items –> Signature Help Tooltip Background

It is important to note that only the background colour can be changed, not the foreground colour.

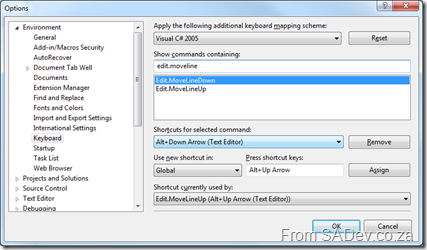

Move Line Up/Down Commands

Did you already have something on Alt+↑ or Alt+↓, or maybe you want to bind these commands to something else? You can do this in Tools menu –> Options –> Environment –> Keyboard. The commands are:

- Edit.MoveLineDown

- Edit.MoveLineUp

The Hack

Should you really dislike an extension or a specific extension is conflicting (tab well for instance conflicts with another tab management extension available) there is a completely unsupported hack to remove a specific feature while keeping other features on. Note that you will need to do this for EVERY update and it is unsupported, so do not bother to ask for help. It may also cause side effects to Visual Studio which cannot be foreseen. In short you had better be desperate.

Hack #1

The VSIX file is just a ZIP file, which you can open in your favourite archive tool. From there you can see the assemblies for each extension and remove them if needed.
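As a sketch of Hack #1 in code rather than an archive tool, the following assumes .NET 4.5 or later (System.IO.Compression with ZipFile available) and should be run against a copy of the VSIX. VsixInspector and its methods are hypothetical helper names, and the entry names you pass in depend on the actual contents of the package.

```csharp
using System;
using System.Collections.Generic;
using System.IO.Compression;
using System.Linq;

public static class VsixInspector
{
    // A VSIX is a ZIP archive, so ZipFile can open it directly.
    // Returns the in-archive path of every assembly (.dll) it contains.
    public static List<string> ListAssemblies(string vsixPath)
    {
        using (ZipArchive archive = ZipFile.OpenRead(vsixPath))
        {
            return archive.Entries
                .Where(entry => entry.FullName.EndsWith(".dll", StringComparison.OrdinalIgnoreCase))
                .Select(entry => entry.FullName)
                .ToList();
        }
    }

    // Deletes one entry (for example a feature's assembly) from the archive in place.
    // Returns false if no entry with that exact in-archive path exists.
    public static bool RemoveEntry(string vsixPath, string entryName)
    {
        using (ZipArchive archive = ZipFile.Open(vsixPath, ZipArchiveMode.Update))
        {
            ZipArchiveEntry entry = archive.GetEntry(entryName);
            if (entry == null)
            {
                return false;
            }
            entry.Delete();
            return true;
        }
    }
}
```

The same warnings apply as for the manual hack: it is unsupported, and removing an assembly the extension manifest expects may stop the extension from loading.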

Hack #2

When an extension is installed it is unpacked to C:\Users\<Username>\AppData\Local\Microsoft\VisualStudio\10.0\Extensions\. In there you will find directories for the various authors, and under those the extensions. To find the Pro Power Tools go to \Microsoft\Visual Studio 2010 Pro Power Tools\10.0.100525.1000 and you will see all the files; simply deleting or moving out the assembly of the feature you do not want will disable it.
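A minimal sketch for Hack #2, assuming the default per-user location described above; it only lists the assemblies in an extension folder and leaves deleting or moving them to you. ExtensionFolders is a hypothetical helper, and Environment.SpecialFolder.LocalApplicationData resolves to C:\Users\<Username>\AppData\Local on Windows.

```csharp
using System;
using System.IO;
using System.Linq;

public static class ExtensionFolders
{
    // Builds the per-user VS2010 extensions root, i.e.
    // <LocalApplicationData>\Microsoft\VisualStudio\10.0\Extensions.
    public static string Vs2010ExtensionsRoot()
    {
        string local = Environment.GetFolderPath(Environment.SpecialFolder.LocalApplicationData);
        return Path.Combine(local, "Microsoft", "VisualStudio", "10.0", "Extensions");
    }

    // Lists the assembly file names in one extension folder, sorted,
    // so you can see which file belongs to which feature before touching anything.
    public static string[] ListAssemblies(string extensionFolder)
    {
        return Directory.GetFiles(extensionFolder, "*.dll")
            .Select(path => Path.GetFileName(path))
            .OrderBy(name => name, StringComparer.OrdinalIgnoreCase)
            .ToArray();
    }
}
```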

How to create an adapter for the TFS Integration Platform - Appendix 1: Power Tips

Note: This post is part of a series and you can find the rest of the parts in the series index.

Throughout the series I have shared a bunch of power tips for making this easier, and this is a quick cheat sheet of them all.

From Part II: Getting Started

- Power Tip: Once you have completed the tools install, go into SQL Server and back up the TFSIntegrationPlatform database immediately. Not only are there a few odd bugs roaming around which may cause you to need it, but if you want to test on a clean environment, a restore is quicker than a reinstall.

- Power Tip: Make a common root for the TFS code and yours (in my case I used RangersCode) and then create sub directories in there for platform and your code (so I had My Production and MS production folders under RangersCode). This helps keep the items close, which makes things easier later plus keeps them separate so you can identify them.

From Part III: Overview of adapters

- Power Tip: The TraceManager puts all information written to it in the log files, so please make sure you do not put any sensitive information in there.

From Part IV: IProvider

- Power Tip: Using Visual Studio 2010’s new “Generate From Usage” features makes this stage of development much easier.

From Part V: Items (IMigrationItem & IMigrationItemSerializer)

- Power Tip: VC stands for Version Control. This refers to an adapter that works with the source control aspects of the system. WI, work items, and WIT, work item tracking, are the same thing. File attachments in WI are NOT regarded as VC and must be handled by your WI adapter.

- Power Tip: When you are downloading files in the IMigrationItem, you are responsible for the creation of the path too. So make sure you are creating directories and also checking what directories exist too.
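A minimal sketch of the download-path tip, assuming the target is a local file path; DownloadPaths.EnsureParentDirectory is a hypothetical helper name, while Directory.CreateDirectory is the standard .NET call that creates every missing folder in one go.

```csharp
using System.IO;

public static class DownloadPaths
{
    // Creates every missing folder on the way to targetFile so a download can write there.
    // Directory.CreateDirectory builds all missing parents and is a no-op for folders that already exist.
    public static void EnsureParentDirectory(string targetFile)
    {
        string folder = Path.GetDirectoryName(targetFile);
        if (!string.IsNullOrEmpty(folder))
        {
            Directory.CreateDirectory(folder);
        }
    }
}
```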

From Part VIII: IMigrationProvider

- Power Tip: In my implementation I used the very useful Path.GetTempFileName() from the .NET framework to get a place to put the file. However this causes an empty temp file to be created automatically and the platform doesn’t like that, so I needed to delete the temp file after that, and then call Download.

- Power Tip: For folder creation, or for deletes of files/folders, you can use the Path property of the action to get the folder name.

From Part IX: IServerPathTranslationService

- Power Tip: The neutral path, or canonical path as it is correctly named, is a path that is “Unix like” (i.e. /src/project/). However, these do not follow all the same rules as true Unix paths. For example, a colon (:) is a valid character in the path.

How to create an adapter for the TFS Integration Platform - Part X: Reflection on SharePoint

Note: This post is part of a series and you can find the rest of the parts in the series index.

This post is not as technically heavy as most of the series, but is more a reflection on what I learnt about integrating with SharePoint. This information holds true for any type of work with SharePoint, not just creating adapters.

What I used

For the WIT adapter I used only the lists.asmx web service, which allows you to work with list items. For the VC adapter I used both the lists.asmx and copy.asmx web services, and I ended up using some WebDav methods too. You may be asking why I needed lists.asmx for VC; it is because of SharePoint Rule #1, “Everything is a list”, and that includes document libraries. The copy web service allows files to be uploaded to SharePoint.

My goal was to use just the web services, which are one of the three ways you can interact with SharePoint, the other two being WebDav and the API. The API is better than the web services in every aspect (it is faster and more feature complete) but has a serious limitation: you MUST run the application using the API on the same server SharePoint is installed on. That makes it really only useful for tools used by SharePoint admins or for web components, like web parts. WebDav is a standard set of HTTP extensions for managing files on a web server, and is generally regarded as a poorer option than the web services because it does much less.

In the end I had a bug with file deletion in the VC adapter when using the web services. After much fighting, I gave up and used WebDav for that one function.

What I learnt

If I rewrote these adapters I would use mostly WebDav, and use the lists.asmx web service only for metadata tasks rather than manipulation. This is because while the WebDav implementation does less, it does all the fundamentals (create, update, delete), and it does them faster and more reliably than the lists and copy web services. The lists.asmx web service would be used only for getting item IDs, lists of items, files and folders, and maybe renaming, since WebDav can’t rename. This would allow me to drop the copy web service, and would give me faster adapters and cleaner code in less time.
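A minimal sketch of the three fundamental WebDav operations as HTTP requests, assuming the modern System.Net.Http types (the 2010 adapters would have used HttpWebRequest). WebDavRequests is a hypothetical helper, the URLs in the usage are made up, and credentials and actually sending the requests are left out.

```csharp
using System;
using System.Net.Http;

public static class WebDavRequests
{
    // PUT uploads (or overwrites) a file at the given URL.
    public static HttpRequestMessage Upload(Uri fileUrl, byte[] content) =>
        new HttpRequestMessage(HttpMethod.Put, fileUrl) { Content = new ByteArrayContent(content) };

    // DELETE removes a file or folder.
    public static HttpRequestMessage Delete(Uri itemUrl) =>
        new HttpRequestMessage(HttpMethod.Delete, itemUrl);

    // MKCOL ("make collection") is the WebDav verb for creating a folder;
    // HttpMethod has no built-in constant for it, so it is created by name.
    public static HttpRequestMessage CreateFolder(Uri folderUrl) =>
        new HttpRequestMessage(new HttpMethod("MKCOL"), folderUrl);
}
```

Each request would then be sent with an HttpClient configured for the site's authentication, for example one built on an HttpClientHandler with UseDefaultCredentials set to true.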

How to create an adapter for the TFS Integration Platform - Part IX: IServerPathTranslationService

Note: This post is part of a series and you can find the rest of the parts in the series index.

IServerPathTranslationService takes the path to your source control item and translates it into a platform-neutral path, and vice versa. An example of this is moving files between Windows and Linux: on Windows your path may be c:\RangersCode\My Production\, while on Linux that path needs to become /src/RangersCode/My Production/. To handle the differences, you convert it to a neutral path first.
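A minimal sketch of the Windows/Linux example, assuming the neutral form simply drops the drive letter and uses forward slashes, and that the Linux side is rooted at /src. PathTranslationSketch is hypothetical and ignores real concerns such as escaping and case sensitivity.

```csharp
using System;

public static class PathTranslationSketch
{
    // Windows -> canonical: drop a leading drive letter ("c:") and swap '\' for '/'.
    public static string WindowsToCanonical(string windowsPath)
    {
        string withoutDrive = windowsPath.Length >= 2 && windowsPath[1] == ':'
            ? windowsPath.Substring(2)
            : windowsPath;
        string canonical = withoutDrive.Replace('\\', '/');
        return canonical.StartsWith("/", StringComparison.Ordinal) ? canonical : "/" + canonical;
    }

    // Canonical -> Linux: hang the canonical path off the Linux root, e.g. "/src".
    public static string CanonicalToLinux(string canonicalPath, string linuxRoot)
    {
        return linuxRoot.TrimEnd('/') + canonicalPath;
    }
}
```

For example, WindowsToCanonical(@"c:\RangersCode\My Production\") gives /RangersCode/My Production/, and CanonicalToLinux of that with "/src" gives /src/RangersCode/My Production/.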

The amount of sleep I lost on path translation is embarrassing, because while the concept is dead simple, applying it correctly is ridiculously hard. The de facto guide for how this should work can be found on Willy-Peter’s blog; there is also an update, based on a lot of questions I asked, which you may want to read.

This is only needed for VC adapters so if you just want a WI adapter you can skip this.

Power Tip: The neutral path, or canonical path as it is correctly named, is a path that is “Unix like” (i.e. /src/project/). However, these do not follow all the same rules as true Unix paths. For example, a colon (:) is a valid character in the path.

The two methods you need to implement are:

TranslateToCanonicalPathCaseSensitive

This method requires you to provide a neutral path for one of your paths. For me this is simply putting a leading slash on the item’s URL:

    public string TranslateToCanonicalPathCaseSensitive(string serverPath)
    {
        TraceManager.TraceInformation("WSSVC:TranslationToCanonical - {0}", serverPath);
        string localPath = string.Format(CultureInfo.CurrentCulture, "/{0}", serverPath);
        TraceManager.TraceInformation("WSSVC:New:{0} -> {1}", serverPath, localPath);
        return localPath;
    }

TranslateFromCanonicalPath

This method is the reverse of TranslateToCanonicalPathCaseSensitive: it takes a neutral path and provides one that applies to your adapter. In my case that meant dropping the first character and making sure I had an absolute URI:

    public string TranslateFromCanonicalPath(string canonicalPath, string canonicalFilterPath)
    {
        TraceManager.TraceInformation("WSSVC:TranslationFromCanonical - {0} - {1}", canonicalPath, canonicalFilterPath);
        string result = new Uri(canonicalPath.Substring(1)).AbsoluteUri;
        TraceManager.TraceInformation("WSSVC:TranslationFromCanonical:Result {0}", result);
        return result;
    }
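To see how the two translations behave together, here is a sketch of the same logic with the TraceManager calls removed so it runs outside the platform; SharePointPathTranslation is a hypothetical wrapper name.

```csharp
using System;
using System.Globalization;

public static class SharePointPathTranslation
{
    // Same as TranslateToCanonicalPathCaseSensitive: prefix the item's URL with "/".
    public static string ToCanonical(string serverPath) =>
        string.Format(CultureInfo.CurrentCulture, "/{0}", serverPath);

    // Same as TranslateFromCanonicalPath: drop the leading "/" and normalise via Uri.
    public static string FromCanonical(string canonicalPath) =>
        new Uri(canonicalPath.Substring(1)).AbsoluteUri;
}
```

One thing this makes visible: Uri.AbsoluteUri escapes characters such as spaces, so the round trip only gives back the original string when the URL was already escaped. "/http://server/My Docs/a.txt" comes back as http://server/My%20Docs/a.txt.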

First in the Platform’s Eyes

There is an interesting thing the platform does when it comes to server path translation: it creates this class first. This is before anything else, like the configuration service, so you need to make sure this class relies on very little, if any, outside information. During creation it also takes the root path from the filter items in your configuration and passes it to TranslateToCanonicalPathCaseSensitive to get the root neutral path. It needs this because it strips the root out when it passes a path to the other adapter, and adds it back when other adapters pass their paths to you.
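The root handling the platform does for you can be pictured with a small sketch. This is not platform code: FilterRootSketch and its methods are hypothetical, it assumes canonical paths use / separators, and for simplicity it rejects the root path itself.

```csharp
using System;

public static class FilterRootSketch
{
    // Strips this side's canonical root (from the filter item) so only the
    // part below the root travels to the other adapter.
    public static string ToRelative(string canonicalPath, string canonicalRoot)
    {
        string root = canonicalRoot.TrimEnd('/');
        // Compare against root + "/" so "/Docs2/x" is not mistaken for a child of "/Docs".
        if (!canonicalPath.StartsWith(root + "/", StringComparison.Ordinal))
        {
            throw new ArgumentException("Path is outside the filter root.", nameof(canonicalPath));
        }
        return canonicalPath.Substring(root.Length);
    }

    // Adds this side's canonical root back onto a relative path from the other adapter.
    public static string FromRelative(string relativePath, string canonicalRoot)
    {
        return canonicalRoot.TrimEnd('/') + relativePath;
    }
}
```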

How to create an adapter for the TFS Integration Platform - Part VIII: IMigrationProvider

Note: This post is part of a series and you can find the rest of the parts in the series index.

The IMigrationProvider interface is the sister to IAnalysisProvider and handles the writing to your system. As with IAnalysisProvider, it includes some methods you can ignore.

InitializeServices

As with IAnalysisProvider the InitializeServices method is what is called first and is used for all setup. In my implementation I do a lot of setup for SharePoint which may not apply to other implementations. One thing you must do though, is register your item serialiser with the platform as follows:

    changeGroupService.RegisterDefaultSourceSerilizer(new SharePointVCMigrationItemSerializer());

ProcessChangeGroup

The ProcessChangeGroup method is the most important method of IMigrationProvider, as it is called to do the write operation. You are provided a ChangeGroup, and the Actions property of that ChangeGroup contains each file/folder/item you need to write/update/delete in your system. ProcessChangeGroup needs to return a log of what has happened, so that the platform knows all the actions were performed and so it can correctly tie up the unique item IDs in your system with the unique item IDs in the other system. The log is kept in a ConversionResult:

    ConversionResult conversionResult = new ConversionResult(configurationService.MigrationPeer, configurationService.SourceId);

Each action has an Action which tells you what you need to do with the item, be it an update or add or delete etc…

Each action also has an ItemTypeReferenceName, which tells you what it is. For WIT this is not too important, as you will be dealing with work items, but for VC this is very important, as it could be a concrete item (a file or folder) or a more abstract item like a branch or merge instruction.

So you need to loop over all the actions and based on the action + type do the correct thing:

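    foreach (MigrationAction action in changeGroup.Actions)
    {
        if (action.Action == WellKnownChangeActionId.Add || action.Action == WellKnownChangeActionId.Edit)
        {
            if (action.ItemTypeReferenceName == WellKnownContentType.VersionControlledFile.ReferenceName)
            {
                // Write the file to your system here.
            }

            // Handle the other item types (folders, etc.) for adds and edits here.
        }

        // Handle the other actions (deletes, renames, etc.) here.
    }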
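The loop above leans on platform types, so here is a self-contained sketch of the same (action, item type) dispatch idea. ChangeKind, ItemKind, PendingAction and ActionDispatcher are hypothetical stand-ins for WellKnownChangeActionId, WellKnownContentType and MigrationAction, and the method returns a description instead of writing anywhere. It uses a C# 8 switch expression on a tuple.

```csharp
// Hypothetical stand-ins for the platform's action and content type identifiers.
public enum ChangeKind { Add, Edit, Delete, Rename }
public enum ItemKind { File, Folder }

public sealed class PendingAction
{
    public ChangeKind Change { get; set; }
    public ItemKind Item { get; set; }
    public string Path { get; set; } = "";
}

public static class ActionDispatcher
{
    // Chooses the write operation from the (action, item type) pair.
    public static string Describe(PendingAction action)
    {
        return (action.Change, action.Item) switch
        {
            (ChangeKind.Add, ItemKind.File) => "upload file " + action.Path,
            (ChangeKind.Edit, ItemKind.File) => "upload file " + action.Path,
            (ChangeKind.Add, ItemKind.Folder) => "create folder " + action.Path,
            (ChangeKind.Delete, _) => "delete " + action.Path,
            _ => "unsupported " + action.Change + " of " + action.Item,
        };
    }
}
```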

Once you have completed your action, you need to add the information to the conversion result log. My two adapters do this very differently, for no reason other than that I wrote the VC adapter much earlier, and when I wrote the WI adapter later I did a more refined, generic implementation there. The key part of adding the information, though, is below. The most important thing is to provide the ID (the third parameter) to the platform so it knows how to link your item in future, which is needed for deletes and updates.

    conversionResult.ItemConversionHistory.Add(new ItemConversionHistory(sourceSystemId, string.Empty, newSharePointId.ToString(), string.Empty));

Items and VC

With the VC adapter the way you request the actual file you want is using the Download method on the source item and providing a path.

Power Tip: In my implementation I used the very useful Path.GetTempFileName() from the .NET framework to get a place to put the file. However this causes an empty temp file to be created automatically and the platform doesn’t like that, so I needed to delete the temp file after that, and then call Download.
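A minimal sketch of that workaround; TempDownloadTarget is a hypothetical helper name. Deleting the placeholder leaves a small window in which another process could take the same name, which seems acceptable for this kind of tool.

```csharp
using System.IO;

public static class TempDownloadTarget
{
    // Path.GetTempFileName() creates an empty file as a side effect.
    // Delete it and hand back just the unique path for Download to write to.
    public static string Create()
    {
        string path = Path.GetTempFileName();
        File.Delete(path);
        return path;
    }
}
```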

Power Tip: For folder creation, or for deletes of files/folders, you can use the Path property of the action to get the folder name.

Items and WIT

WIT is easier when it comes to writing because you do not need to worry about paths and files - you just need to do some XML parsing. All the information about the item will be provided to you in the MigrationActionDescription property of the action and you need to parse that into an item. Since the mapping of field names is handled by the platform this is very simple. In my case the following small method was all I needed to build a list of fields:

    private static Dictionary<string, object> BuildFieldList(IMigrationAction action)
    {
        Dictionary<string, object> fields = new Dictionary<string, object>();
        XmlNodeList columns = action.MigrationActionDescription.SelectNodes("/WorkItemChanges/Columns/Column");
        foreach (XmlNode columnData in columns)
        {
            string fieldValue = columnData.FirstChild.InnerText;
            string fieldName = columnData.Attributes["ReferenceName"].Value;
            if (string.IsNullOrEmpty(fieldName) == false)
            {
                fields.Add(fieldName, fieldValue);
            }
        }

        return fields;
    }
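To try the parsing without the platform, here is a sketch of the same logic taking an XmlDocument directly; FieldListSketch is a hypothetical name. The XML shape used in the example (a Column element with a ReferenceName attribute and a single child holding the value) is an assumption inferred from the XPath and FirstChild calls above, not taken from the platform. Unlike the original, it skips a Column with no ReferenceName attribute instead of throwing.

```csharp
using System.Collections.Generic;
using System.Xml;

public static class FieldListSketch
{
    // Builds reference name -> value pairs from /WorkItemChanges/Columns/Column.
    public static Dictionary<string, object> BuildFieldList(XmlDocument description)
    {
        var fields = new Dictionary<string, object>();
        foreach (XmlNode columnData in description.SelectNodes("/WorkItemChanges/Columns/Column"))
        {
            // Null-safe: a Column without ReferenceName is ignored.
            string fieldName = columnData.Attributes["ReferenceName"]?.Value;
            if (!string.IsNullOrEmpty(fieldName))
            {
                fields.Add(fieldName, columnData.FirstChild?.InnerText);
            }
        }
        return fields;
    }
}
```

For example, loading <WorkItemChanges><Columns><Column ReferenceName="System.Title"><Value>Login fails</Value></Column></Columns></WorkItemChanges> yields one field, System.Title, with the value Login fails.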

Item ID

The platform handles the mapping of item IDs too, so if you need to know which item needs to be updated or deleted, it can be found in the MigrationActionDescription. The following method will work regardless of what your system is:

    private string GetSharePointID(IMigrationAction action)
    {
        TraceManager.TraceInformation("WSSWIT:MP:GetSharePointID");
        XmlNode workItemChangesNode = action.MigrationActionDescription.SelectSingleNode("/WorkItemChanges");
        string value = string.Empty;
        if (workItemChangesNode.Attributes["TargetWorkItemID"] == null)
        {
            TraceManager.TraceInformation("WSSWIT:MP:GetSharePointID:Cannot find work item id. XML is: {0}", workItemChangesNode.OuterXml);
        }
        else
        {
            value = workItemChangesNode.Attributes["TargetWorkItemID"].Value;
            TraceManager.TraceInformation("WSSWIT:MP:GetSharePointID:Value {0}", value);
        }

        return value;
    }

How to create an adapter for the TFS Integration Platform - Part VII: WIT Conflict Handling

Note: This post is part of a series and you can find the rest of the parts in the series index.

The WIT adapter needs a custom conflict type and a custom conflict handler, really for no reason other than the platform expects it.

Conflict Handler

If you have no reason for a custom conflict handler, a simple implementation which allows for manual resolution can be created, which is what I have below.

    public class SharePointWITGeneralConflictHandler : IConflictHandler
    {
        public bool CanResolve(MigrationConflict conflict, ConflictResolutionRule rule)
        {
            return ConflictTypeHandled.ScopeInterpreter.IsInScope(conflict.ScopeHint, rule.ApplicabilityScope);
        }

        public ConflictResolutionResult Resolve(MigrationConflict conflict, ConflictResolutionRule rule, out List<MigrationAction> actions)
        {
            actions = null;
            if (rule.ActionRefNameGuid.Equals(new ManualConflictResolutionAction().ReferenceName))
            {
                return ManualResolve(out actions);
            }

            return new ConflictResolutionResult(false, ConflictResolutionType.Other);
        }

        public ConflictType ConflictTypeHandled
        {
            get;
            set;
        }

        private static ConflictResolutionResult ManualResolve(out List<MigrationAction> actions)
        {
            actions = null;
            return new ConflictResolutionResult(true, ConflictResolutionType.Other);
        }
    }

Conflict Type

If you have no reason for a custom conflict type, you can do what I did, which is to re-implement the generic one with even fewer features, namely only supporting ManualConflictResolution and a very simple scope hint.

    public class SharePointWITGeneralConflictType : ConflictType
    {
        public static MigrationConflict CreateConflict(Exception exception)
        {
            return new MigrationConflict(
                new SharePointWITGeneralConflictType(),
                MigrationConflict.Status.Unresolved,
                exception.ToString(),
                CreateScopeHint(Guid.NewGuid().ToString()));
        }

        public static MigrationConflict CreateConflict(Exception exception, IMigrationAction conflictedAction)
        {
            return new SharePointWITGeneralConflictType().CreateConflict(exception.ToString(), CreateScopeHint(Guid.NewGuid().ToString()), conflictedAction);
        }

        public override Guid ReferenceName
        {
            get { return s_conflictTypeReferenceName; }
        }

        public override string FriendlyName
        {
            get { return s_conflictTypeFriendlyName; }
        }

        public override string Description
        {
            get { return s_conflictTypeDescription; }
        }

        public SharePointWITGeneralConflictType()
            : base(new SharePointWITGeneralConflictHandler())
        { }

        public static string CreateScopeHint(string sourceItemId)
        {
            return string.Format(CultureInfo.CurrentCulture, "/{0}/{1}", sourceItemId, Guid.NewGuid().ToString());
        }

        protected override void RegisterDefaultSupportedResolutionActions()
        {
            AddSupportedResolutionAction(new ManualConflictResolutionAction());
        }

        private static readonly Guid s_conflictTypeReferenceName = new Guid("{606531DF-231A-496B-9996-50F239481988}");
        private const string s_conflictTypeFriendlyName = "TFS WIT general conflict type";
        private const string s_conflictTypeDescription =
            "This conflict is detected when an unknown exception is thrown during Work Item data submission.";
    }