Learning Kotlin: Named Arguments

- Code for the 3rd Koan can be found here.

- This is the 3rd post in a multipart series.

If you want to read more, see our series index.

On to our third exercise, and the difficulty curve has definitely eased off again (or should that be steepened?), as we have a simple lesson: how to use named arguments.

Not only are we given an example of how named arguments work:

```kotlin
fun usage() {
    // named arguments
    bar(1, b = false)
}
```

but we also get an example of default values for arguments:

```kotlin
fun bar(i: Int, s: String = "", b: Boolean = true) {}
```
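To see the two features working together, here is a small sketch. It uses a hypothetical variant of the Koan's `bar` that returns a description instead of having an empty body (that change is mine, purely so each call has a visible effect).

```kotlin
// Hypothetical variant of the Koan's `bar`: returns a description of its
// arguments so the effect of defaults and named arguments is visible.
fun bar(i: Int, s: String = "", b: Boolean = true): String =
    "i=$i, s=\"$s\", b=$b"

fun usage(): List<String> = listOf(
    bar(1),                         // s and b fall back to their defaults
    bar(1, b = false),              // skip s entirely by naming b
    bar(1, "x"),                    // plain positional call; b uses its default
    bar(b = false, s = "y", i = 2)  // fully named: any order is allowed
)
```

Naming `b` is what lets the second call skip `s` without having to repeat its default value.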

The problem we need to solve is simple too:

Print out the collection contents surrounded by curly braces using the library function 'joinToString'. Specify only 'prefix' and 'postfix' arguments.

It has this answer:

```kotlin
fun task2(collection: Collection<Int>): String {
    return collection.joinToString(prefix = "{", postfix = "}")
}
```
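Named arguments earn their keep here because `separator` comes before `prefix` and `postfix` in `joinToString`'s parameter list. This sketch (the extra function names are my own) contrasts the named call with the positional equivalent and with a fully customised, reordered call.

```kotlin
// The Koan answer as a single-expression function.
fun task2(collection: Collection<Int>): String =
    collection.joinToString(prefix = "{", postfix = "}")

// Without names, reaching prefix and postfix means also passing the separator,
// because it is the first parameter of joinToString.
fun task2Positional(collection: Collection<Int>): String =
    collection.joinToString(", ", "{", "}")

// Named arguments also let you override any subset, in any order.
fun task2Custom(collection: Collection<Int>): String =
    collection.joinToString(postfix = ")", separator = "; ", prefix = "(")
```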

Unfortunately, there is not much more to add to this lesson.

Learning Kotlin: Java To Kotlin Converter

The second Koan is meant as an exercise in using the IDE and is targeted at those moving from Java; as such, this was a mixed bag for me.

The goal is to get a Kotlin version of Java code by letting the IDE do the conversion, but I decided to tackle it myself.

Initially, I thought I needed to convert the entire file and not just the task function. So, what does the entire code look like when changed? Here is the original Java, followed by my Kotlin version:

```java
package i_introduction._1_Java_To_Kotlin_Converter;

import util.JavaCode;

import java.util.Collection;
import java.util.Iterator;

public class JavaCode1 extends JavaCode {
    public String task1(Collection<Integer> collection) {
        StringBuilder sb = new StringBuilder();
        sb.append("{");
        Iterator<Integer> iterator = collection.iterator();
        while (iterator.hasNext()) {
            Integer element = iterator.next();
            sb.append(element);
            if (iterator.hasNext()) {
                sb.append(", ");
            }
        }
        sb.append("}");
        return sb.toString();
    }
}
```

```kotlin
package i_introduction._1_Java_To_Kotlin_Converter

import util.JavaCode

import kotlin.collections.Collection

class JavaCode1 : JavaCode() {
    fun task1(collection: Collection<Int>): String {
        val sb = StringBuilder()
        sb.append("{")
        val iterator = collection.iterator()
        while (iterator.hasNext()) {
            val element = iterator.next()
            sb.append(element)
            if (iterator.hasNext()) {
                sb.append(", ")
            }
        }
        sb.append("}")
        return sb.toString()
    }
}
```
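For comparison, a line-by-line conversion is not the only option. This sketch (function names are my own, and the `JavaCode` base class is dropped so it stands alone) puts the converted loop next to a single-expression version built on `joinToString`, the same standard library function the Named Arguments Koan uses.

```kotlin
// The hand-converted loop from above, without the JavaCode base class.
fun task1Loop(collection: Collection<Int>): String {
    val sb = StringBuilder()
    sb.append("{")
    val iterator = collection.iterator()
    while (iterator.hasNext()) {
        sb.append(iterator.next())
        if (iterator.hasNext()) sb.append(", ")
    }
    sb.append("}")
    return sb.toString()
}

// The same result as a single expression using the standard library.
fun task1(collection: Collection<Int>): String =
    collection.joinToString(prefix = "{", postfix = "}")
```

Both produce `{1, 2, 3}` for `listOf(1, 2, 3)`, because `joinToString` defaults its separator to `", "`.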

The first thing you'll notice is that the java.util.Collection import is gone, since Kotlin has its own Collection interface in kotlin.collections, which is imported by default (so even the explicit import above is optional).

Next is the code's lack of visibility modifiers. This is because everything is public by default. In addition to public, there are protected, private and internal, which work much like .NET's modifiers.
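A minimal sketch of the four modifiers on class members; `Base`, `Derived` and the property names are invented for illustration.

```kotlin
open class Base {
    val a = 1            // no modifier: public by default
    private val b = 2    // visible inside Base only
    protected val c = 3  // visible inside Base and its subclasses
    internal val d = 4   // visible anywhere in the same module
}

class Derived : Base() {
    // a, c and d are accessible here; referencing b would not compile.
    fun visible() = a + c + d
}
```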

The next change is the variables: you don't need to declare the type before the variable name. You just use val for read-only variables or var for mutable ones, and the compiler infers the type. This is similar to C#'s var keyword.
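A short sketch of val, var and optional type annotations (the function and variable names are my own):

```kotlin
fun describe(): String {
    val greeting = "Hello"   // read-only; type inferred as String
    var count = 0            // mutable; type inferred as Int
    val answer: Long = 42    // a type can still be given, after the name
    count += 1               // allowed: a var can be reassigned
    // greeting = "Hi"       // would not compile: a val cannot be reassigned
    return "$greeting $count $answer"
}
```

Calling `describe()` returns `Hello 1 42`.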

The final change is the lack of semicolons. This doesn't mean Kotlin doesn't have them; it means they are optional. You can add them as if it were Java or C# with no issue; you just don't need them unless you are doing something confusing for the compiler, such as putting two statements on one line.

SFTPK: Red/Black Trees

This post is one in a series of stuff formally trained programmers know – the rest of the series can be found in the series index.

Building on the binary search tree (BST), we get the red/black tree, which aims to solve the problem that a BST can become unbalanced.

The short version is that a red/black tree is a BST with a set of rules that help us keep it performing well.

Thinking back to the BST, as we do inserts and deletes, at some point we need to rebalance the tree to keep that sweet O(log n) search performance. But when is the right time to do that rebalancing?

A red/black tree extends a BST by adding one bit of information to each node; for those keeping track, our node now has the data, a key and a flag.

The flag is either red or black, and there are 7 rules for the tree (the first 5 below are the official ones that relate to the colours; the last two are about how the tree is maintained):

- Each node is either red or black

- The root node is black

- All leaves are black. These leaves can be the null pointers off of a node, or they can be explicit nodes.

- A red node can only have black children

- Any path from a node to the leaves contains the same number of black nodes

- New nodes are always added as red.

- If the depth of a path is more than twice that of the shortest path, we need to do a rotation.
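The five colour rules can be turned into a checker. This is a minimal sketch with Int keys and my own names (`Color`, `Node`, `blackHeight`, `isValidRedBlack`); `null` children play the role of the black leaves from rule 3, and it does not check BST ordering. Rules 6 and 7 describe how the tree is maintained, so they are not checked; the "no more than twice" bound in rule 7 is what rules 4 and 5 guarantee when they hold.

```kotlin
enum class Color { RED, BLACK }  // rule 1: every node is one of these

class Node(val key: Int, var color: Color, var left: Node? = null, var right: Node? = null)

// Returns the number of black nodes on any path from `node` down to a leaf,
// counting the null leaf itself, or -1 if rule 4 or rule 5 is broken.
fun blackHeight(node: Node?): Int {
    if (node == null) return 1  // rule 3: null leaves count as black
    // Rule 4: a red node can only have black children.
    if (node.color == Color.RED &&
        (node.left?.color == Color.RED || node.right?.color == Color.RED)) return -1
    val left = blackHeight(node.left)
    val right = blackHeight(node.right)
    // Rule 5: every path to a leaf passes through the same number of black nodes.
    if (left == -1 || right == -1 || left != right) return -1
    return left + if (node.color == Color.BLACK) 1 else 0
}

// Rule 2: the root must be black; then check rules 4 and 5 recursively.
fun isValidRedBlack(root: Node?): Boolean =
    (root == null || root.color == Color.BLACK) && blackHeight(root) != -1
```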

With these rules, insert and delete get more complex, because you need to check the rules and, if any are broken, correct the issue. What is great is that, because the rules are so specific, correcting the problems becomes really efficient.

So let's look at a really simple BST:

Next, let's make it a red/black tree following our rules above. I am also going to add the leaf nodes in this image to help explain it, but will leave them out of the remaining ones.

Note that:

- From the root node, you need to go through 1 black node to reach a leaf node, regardless of path.

- All red nodes only have black children.

- A black node can have black children.

Now we are going to add a node with value 11. It is added in the correct place and as a red node.

However, 9 is red and now has a red child, so we need to repaint 9 black. That causes a new issue: from the root node to the leaves, you may go through 1 black node by going left or 2 black nodes by going right, so we need to paint the parent of 9 (i.e. 7) red. Since 7 is now red, 6 must be repainted black. Finally, we have a correct red/black tree again.

Lastly, let us add 10, which goes under 11. Immediately we note that the length from 5 to the leaf of 10 is 4 steps, while the shortest path (the left side) is 2, so we know we need to do a rotation.

The rotation is easy: we take the child on the longer side (i.e. 7), make it the root, and make the original root (i.e. 5) a child of it. Since 7 then has two children already (5 and 9), its original child 6 moves to under 5. Next, we just trigger a repaint, starting with the fact that 7 needs to be black, and you end up with the following:
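The rotation described above is a left rotation. Here is a minimal sketch of it; the `Node` class and `rotateLeft` name are my own, colours are left out (the repaint is a separate step), and the caller is assumed to store the returned node as the new root (or in the old root's parent).

```kotlin
class Node(val key: Int, var left: Node? = null, var right: Node? = null)

// Left rotation: the right child (the pivot) becomes the root of this subtree,
// the old root becomes the pivot's left child, and the pivot's old left child
// moves across to become the old root's right child.
fun rotateLeft(root: Node): Node {
    val pivot = root.right ?: return root  // nothing to rotate without a right child
    root.right = pivot.left  // e.g. 6 moves from under 7 to under 5
    pivot.left = root        // 5 becomes 7's left child
    return pivot             // 7 is the new root
}
```

Only three references change, which is why a rotation is cheap.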

This might seem really expensive to do, but since you are only changing a few pointers, the insert is still O(log n).

Implementations

Java's java.util.TreeMap is documented as a red-black tree based implementation, but unfortunately neither .NET nor JavaScript exposes a red/black tree type out of the box; there are plenty of implementations available if you search for one.

Learning Kotlin: Hello World

- The code being referenced.

- This is the 1st post in a multipart series.

If you want to read more, see our series index.

So let's start with line one: package i_introduction._0_Hello_World

In Kotlin, a package is just the same as a package in Java or a namespace in .NET. There is no required link between the package name and the filesystem.

Next up is imports:

```kotlin
import util.TODO
import util.doc0
```

Imports are the same as import in Java and using in C#. Kotlin also imports a number of packages by default:

- kotlin.*

- kotlin.annotation.*

- kotlin.collections.*

- kotlin.comparisons.* (since 1.1)

- kotlin.io.*

- kotlin.ranges.*

- kotlin.sequences.*

- kotlin.text.*

Next up is our first function definition: fun todoTask0(): Nothing = ...

We use the fun keyword to declare a function, followed by the function name (todoTask0) and the parameters, which in this case are empty.

The function returns Nothing, though declaring that isn't strictly required, as the compiler can infer the return type.

This is a single-expression function, so instead of a body in braces, the signature is followed by an equals sign and the expression.
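A sketch contrasting the two function styles. The names are my own, and where the Koan imports its own util.TODO, this uses the standard library's TODO(), which always throws NotImplementedError and is therefore typed as Nothing.

```kotlin
// Block body: braces and an explicit return; the return type is declared.
fun doubleBlock(x: Int): Int {
    return x * 2
}

// Single-expression body: `=` followed by the expression; Int is inferred.
fun doubleExpr(x: Int) = x * 2

// The standard library's TODO() never returns normally, so its type is Nothing.
fun notYet(): Nothing = TODO("not implemented yet")
```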

The next function is a little different:

```kotlin
fun task0(): String {
    return todoTask0()
}
```

The second function returns a String and has a block body rather than a single expression, so it needs braces and an explicit return.

So how do we solve this Koan?

```kotlin
fun task0(): String {
    return "OK"
}
```

Learning Kotlin: Introduction

I have recently decided to learn Kotlin, and I have started with the Koans.

Koans are simple unit tests which help ease you into learning a new language.

The first step was setting this up on Windows with VSCode... because, for some reason, I hate myself that much.

Requirements

- Install Java SDK

- Install the Java Extension Pack for VSCode

- Install the Kotlin Language Extension for VSCode

Using

So I am using VSCode as the editor and then the command line to run the unit tests.

Parts

Since this will be ongoing, I am going to break it into a number of parts, listed below (this list will be updated over time):

- Hello World

- Java To Kotlin Converter

- Named Arguments

- Default Arguments

- Lambdas

- String Templates

- Data Classes

- Nullable Types

- Smart Casting

- Extension Functions and Extensions On Collections

- Object Expressions and SAM Conversions

- Kotlin's Elvis Operator

- Return when

- The awesome that is the backtick

- Collections

- It is a thing

- Operators

- Operators don't need to mean one thing

- Destructuring

- For Loop

- Invoke

- Looking at the In Operator & Contains

- The .. operator

- Todo

- By

- The lazy delegate

- The Observable Delegate (with a slight detour on reference functions)

- The map delegate

- The vetoable delegate

- The notnull delegate and lateinit

Quick tip: Column display

When you work with delimited data (CSV, TSV, etc.), it can be a pain to view the data in a readable way. Take, for example, this data:

```shell
cat people-example.csv.txt
First Name,Last Name,Country,age
"Bob","Smith","United States",24
"Alice","Williams","Canada",23
"Malcolm","Jone","England",22
"Felix","Brown","USA",23
"Alex","Cooper","Poland",23
"Tod","Campbell","United States",22
"Derek","Ward","Switzerland",25
```

On Unix-like OSs, you can use the column command to format the layout; for example:

```shell
column -t -s',' people-example.csv.txt
First Name  Last Name   Country          age
"Bob"       "Smith"     "United States"  24
"Alice"     "Williams"  "Canada"         23
"Malcolm"   "Jone"      "England"        22
"Felix"     "Brown"     "USA"            23
"Alex"      "Cooper"    "Poland"         23
"Tod"       "Campbell"  "United States"  22
"Derek"     "Ward"      "Switzerland"    25
```

On Windows, you can use Import-Csv and Format-Table in PowerShell:

```powershell
Import-Csv .\people-example.csv.txt | Format-Table

First Name Last Name Country       age
Bob        Smith     United States 24
Alice      Williams  Canada        23
Malcolm    Jone      England       22
Felix      Brown     USA           23
Alex       Cooper    Poland        23
Tod        Campbell  United States 22
Derek      Ward      Switzerland   25
```

SFTPK: Binary Search Tree

This post is one in a series of stuff formally trained programmers know – the rest of the series can be found in the series index.

Binary Search Tree

In the previous post, we covered the binary tree, which is about the shape in which we store the data. The binary search tree (BST) is a further enhancement to that structure.

The first important change is that the data we are storing needs a key. If we have a basic type like a string or number, the value itself can be the key; if we have a more complex class, we need to define a key in that structure or build a unique key for each item.

The second change is a way to compare those keys, which is crucial for the performance of the data structure. Numbers are easiest, since we can easily compare which is larger and which is smaller.

The third and final change is the way we store the items: the left node's key will always be smaller than the parent node's key, and the right node's key will always be larger.
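The three changes above can be sketched as a minimal BST. It assumes Int keys, where the value is its own key (the post allows any comparable key), ignores duplicate keys, and the names `BstNode`, `insert` and `inOrder` are my own.

```kotlin
// A minimal BST node; the Int value doubles as its key.
class BstNode(val key: Int) {
    var left: BstNode? = null
    var right: BstNode? = null
}

// Inserts `key` following the BST rule: smaller keys go left, larger go right.
// Returns the (possibly new) root; duplicate keys are ignored.
fun insert(root: BstNode?, key: Int): BstNode {
    if (root == null) return BstNode(key)
    when {
        key < root.key -> root.left = insert(root.left, key)
        key > root.key -> root.right = insert(root.right, key)
    }
    return root
}

// An in-order traversal visits keys in ascending order, a handy check of the rule.
fun inOrder(node: BstNode?, out: MutableList<Int> = mutableListOf()): List<Int> {
    if (node != null) {
        inOrder(node.left, out)
        out.add(node.key)
        inOrder(node.right, out)
    }
    return out
}
```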

As an example, here is a BST using just numbers as keys:


Note that every node in a node's left subtree is smaller than it, and every node in its right subtree is larger.

Why?

So, why should we care about a BST? We should care because searching it is really performant: each time you move one level down, you eliminate approximately 50% of the potential nodes.

So, for example, if we wanted to find the item in our example with the key 66, we could start at the root (50) and move right. At that point, we have eliminated 8 possible nodes immediately. The next step is to the left of the node with the key 70 (12 possible nodes removed in total). Next is to the right of the node with the key 65, and then to 66, to the left of 67. So we found the node in 5 steps.

In Big O notation, this means we achieved performance close to O(log n). The worst case is O(n), when the tree is not optimal, i.e. unbalanced.
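The walk-through above can be sketched as a search that records the keys it visits. The `Node` class and `searchPath` name are my own, and since the example tree lives in an image, the test builds a hypothetical tree that simply reproduces the 50, 70, 65, 67, 66 path.

```kotlin
class Node(val key: Int, val left: Node? = null, val right: Node? = null)

// Walks down from the root, going left for smaller keys and right for larger ones.
// Returns the keys visited, ending with the target if it was found.
fun searchPath(root: Node?, target: Int): List<Int> {
    val path = mutableListOf<Int>()
    var current = root
    while (current != null) {
        path.add(current.key)
        current = when {
            target < current.key -> current.left
            target > current.key -> current.right
            else -> return path  // found it
        }
    }
    return path  // fell off the tree: target is not present
}
```

Each loop iteration discards one subtree, which is where the roughly 50% elimination per level comes from on a balanced tree.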

Balanced versus Unbalanced

In the above example, we have a binary search tree which is optimal, i.e. it has the lowest depth needed. Below we can see a totally valid BST; each child node is to the right of its parent because it is bigger than the parent.


This, however, will result in an O(n) search performance, which is not ideal. The solution is to rebalance the BST, and for our example above we can end up with multiple end states (I'll show two below). The key takeaway is that we go from a depth of 5 to a depth of 3.
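One simple way to rebalance, sketched below, is to collect the keys in order and rebuild the tree by always making the middle key the root of each range. This is my choice of technique for illustration (it rebuilds the whole tree in O(n)); self-balancing trees such as red/black trees use local rotations instead. All names are my own.

```kotlin
class Node(val key: Int, var left: Node? = null, var right: Node? = null)

// Depth = number of nodes on the longest root-to-leaf path.
fun depth(node: Node?): Int =
    if (node == null) 0 else 1 + maxOf(depth(node.left), depth(node.right))

// Collects keys in ascending order (in-order traversal).
fun keys(node: Node?, out: MutableList<Int> = mutableListOf()): MutableList<Int> {
    if (node != null) {
        keys(node.left, out)
        out.add(node.key)
        keys(node.right, out)
    }
    return out
}

// Builds a balanced BST from sorted keys: the middle key of each range becomes its root.
fun build(sorted: List<Int>, from: Int = 0, to: Int = sorted.size - 1): Node? {
    if (from > to) return null
    val mid = (from + to) / 2
    return Node(sorted[mid], build(sorted, from, mid - 1), build(sorted, mid + 1, to))
}

fun rebalance(root: Node?): Node? = build(keys(root))
```

For a right-leaning chain of 1 to 5, this picks 3 as the root and brings the depth down from 5 to 3, matching one of the possible end states.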


Implementations

.NET has a built-in implementation with SortedDictionary, and Java has java.util.TreeMap, which is documented as a red-black tree (a self-balancing BST). Unfortunately, nothing exists out of the box for this in JavaScript.

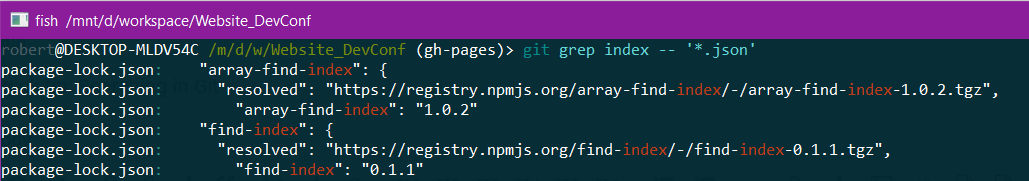

Quick tip: Grep in Git

This quick tip is about two small features of Git I wish I had known about earlier, as they make searching through a repository way easier.

git-grep

git grep searches through your tracked files for whatever pattern you provide. For example, to find every line containing the word index: git grep index

We can limit the search to specific files; for example, to filter the above example to just JSON files: git grep index -- '*.json'

We can also search for multiple patterns at once; for example, to limit matches to files that contain both index and model: git grep --all-match -e index -e model

git-log grep

git log has a --grep option too, which is awesome for finding commits whose messages contain a specific word or words. For example, if I want to find all commits about Speakers for DevConf, I could do: git log --all --grep "Speaker"

Drupal Geshi Cheatsheet

Since redoing this blog, I switched out the syntax highlighting to use the Drupal Geshi Module.

For the love of everything, I can't remember the tricks for using it, so here is a cheatsheet: mostly for myself, but maybe you'll get value from it too. These are all HTML attributes you can add to your code block.

- language controls the language used for rendering.

- line_numbering controls whether line numbering is off, on or fancy, with the values off, normal and fancy respectively.

  - With fancy line numbers, you can use the attribute interval to control how often the line numbers are shown.

- title adds a title to the code block.

- special_lines takes a comma-separated list of numbers and highlights them.

- linenumbers_start controls what the first line number is.

Information worked out from this code.

Quick tip: The handy command line calculator


Who needs a GUI to do math when we have options for Unix-like OSs (macOS, Linux, etc.), Command Prompt, and PowerShell?

Unix-like OSs

Unix-like OSs, including macOS, WSL (Windows Subsystem for Linux) and Linux, include an awesome calculator called bc. From the man page:

bc is a language that supports arbitrary precision numbers with interactive execution of statements. There are some similarities in the syntax to the C programming language. A standard math library is available by command line option. If requested, the math library is defined before processing any files. bc starts by processing code from all the files listed on the command line in the order listed. After all the files have been processed, bc reads from the standard input. All code is executed as it is read.

The only caveat to using it is the input: bc reads from files and standard input, so you can't just pass the equation in as a parameter... but you can use echo to pipe it in. For example:

```shell
echo '1 + 2 + 3 + 4' | bc
10
```

bc can also work with different number bases, for example:

```shell
echo "obase=2; ibase=10; 42" | bc
101010
```

obase stands for output base and ibase for input base, so in the example we are converting 42 (base 10) to binary.
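If you want to cross-check bc's base conversion from code, Kotlin's standard library can do the same thing with `Int.toString(radix)` and `String.toInt(radix)`. A small sketch; the function names are my own.

```kotlin
// Equivalent of bc's `obase=2; ibase=10; 42`: render an Int in base 2.
fun toBinary(n: Int): String = n.toString(2)

// And back again: parse a base-2 string into an Int.
fun fromBinary(s: String): Int = s.toInt(2)
```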

Division is a weirdness with bc: by default it keeps 0 digits after the decimal point (a scale of 0), so you would expect the answer below to be 0.4, but it is 0:

```shell
echo "2/5" | bc
0
```

The solution is to use the math library switch -l:

```shell
echo "2/5" | bc -l
.40000000000000000000
```

and if 20 decimal places is too many, you can use scale to control it:

```shell
echo "scale=3; 2/5" | bc -l
.400
```
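Kotlin behaves like bc's default here: dividing two Ints truncates. To mimic scale, one option (my choice, not anything bc itself uses) is java.math.BigDecimal with a fixed scale and RoundingMode.DOWN, since bc truncates rather than rounds. The function names are my own.

```kotlin
import java.math.BigDecimal
import java.math.RoundingMode

// Like bc with its default scale of 0: integer division truncates.
fun intDivide(a: Int, b: Int): Int = a / b

// Like bc's `scale=N`: keep N digits after the point, truncating toward zero.
fun scaledDivide(a: Int, b: Int, scale: Int): String =
    BigDecimal(a).divide(BigDecimal(b), scale, RoundingMode.DOWN).toPlainString()
```

One difference: Kotlin prints a leading zero (`0.400`) where bc prints `.400`.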

Windows Command Prompt

Command Prompt has a similar tool in the set command's /a switch, which evaluates integer expressions:

```bat
set /a 3+3
6
set /a (3+3)*3
18
set /a "203>>3"
25
```

PowerShell

PowerShell natively supports basic arithmetic, and for more advanced functionality you can use the entire System.Math class:

```powershell
4+5
9
6*7
42
[Math]::Sin(50)
-0,262374853703929
[Math]::Max([Math]::Tan(40), [Math]::Cos(40))
-0,666938061652262
```